- unwind ai

RAG Workbench to Evaluate LLM Apps

PLUS: Microsoft's new vision language model, OpenAI's first acquisition

Today’s top AI Highlights:

Evaluate your RAG app with personalized evaluation models

Microsoft releases new vision language models

Open-weight function-calling model with GPT-4o quality

OpenAI makes first acquisition, snapping up analytics company Rockset

Automate browser-based workflows with LLMs and computer vision

& so much more!

Read time: 3 mins

Latest Developments 🌍

LastMile AI has just released RAG Workbench in beta to help developers evaluate, debug, and optimize their Retrieval Augmented Generation (RAG) applications. The tool gives deeper insight into your RAG app’s performance and offers a rich debugging experience.

The best part is it allows you to tailor your evaluations to your specific needs by using your own data to fine-tune evaluators. This ensures you’re measuring what really matters, not just generic metrics.

Key Highlights:

Distributed Tracing - RAG Workbench goes beyond just tracing your LLM; it also traces both retrieval and data ingestion pipelines, offering a comprehensive view of your entire RAG system.

Fine-Tuned Evaluators - The tool comes with pre-built, highly accurate evaluator models specifically designed for RAG applications. These models are significantly more cost-effective and perform better than generic solutions.

Debugging & Experimentation - RAG Workbench provides interactive debugging tools to inspect retrieved context, rerun prompts, and track your RAG application’s activity. This lets you quickly pinpoint and fix problems.
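To make the tracing idea concrete, here is a minimal sketch of recording one span per pipeline stage (ingestion, retrieval, generation). This is not the RAG Workbench API: the `Tracer` class, `run_pipeline`, the sentence-level chunking, and the word-overlap retriever are all hypothetical stand-ins chosen to keep the example self-contained.

```python
import time
from contextlib import contextmanager


class Tracer:
    """Hypothetical in-memory tracer; NOT the RAG Workbench API."""

    def __init__(self):
        self.spans = []  # finished spans, in completion order

    @contextmanager
    def span(self, stage, **attributes):
        # Time the wrapped block and store its attributes when it exits.
        start = time.perf_counter()
        record = {"stage": stage, "attributes": attributes}
        try:
            yield record
        finally:
            record["duration_s"] = time.perf_counter() - start
            self.spans.append(record)


def run_pipeline(tracer, question, documents):
    # Ingestion: split documents into chunks (assumption: one chunk per sentence).
    with tracer.span("ingestion", num_documents=len(documents)) as s:
        chunks = [c.strip() for d in documents for c in d.split(".") if c.strip()]
        s["attributes"]["num_chunks"] = len(chunks)

    # Retrieval: rank chunks by naive word overlap with the question.
    with tracer.span("retrieval", query=question) as s:
        q_words = set(question.lower().split())
        ranked = sorted(
            chunks,
            key=lambda c: len(q_words & set(c.lower().split())),
            reverse=True,
        )
        context = ranked[:1]
        s["attributes"]["retrieved"] = context

    # Generation: stand-in for an LLM call; it only echoes the context.
    with tracer.span("generation") as s:
        answer = f"Based on the context: {context[0]}" if context else "No context found."
        s["attributes"]["answer"] = answer
    return answer
```

Because every stage writes to the same trace, a bad answer can be followed back to the chunk that was retrieved and to how that chunk was produced during ingestion.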

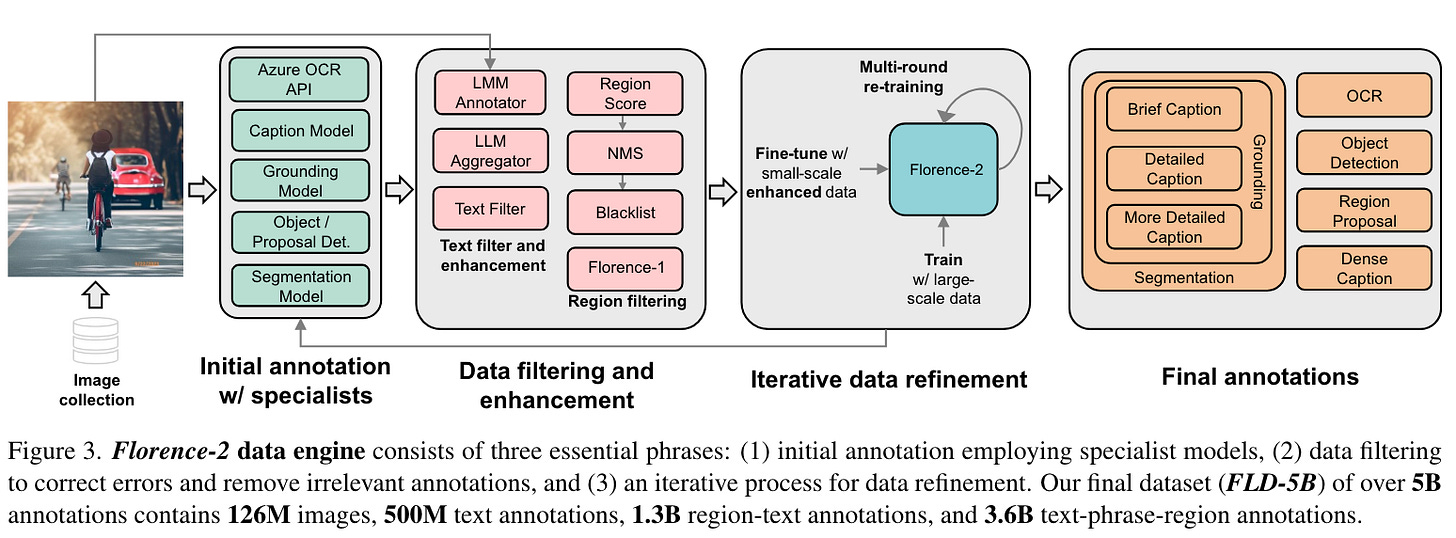

Microsoft has released Florence-2, a new vision foundation model that can handle a wide range of computer vision and vision-language tasks. Unlike many existing models, Florence-2 can tackle these tasks with simple text instructions and understand complex visual information. This is made possible by FLD-5B, a large dataset containing 5.4 billion visual annotations on 126 million images. Microsoft has made the Florence-2 models publicly available, so researchers and developers can explore their capabilities.

Key Highlights:

Unified Prompt-Based Approach - Florence-2 can perform various vision tasks like captioning, object detection, and grounding using only text prompts. This eliminates the need for task-specific fine-tuning and makes the model very versatile.

Large-Scale Annotated Data - The FLD-5B dataset was created using an iterative process of automated image annotation and model refinement. This dataset is vital for training a model as capable as Florence-2.

Unprecedented Zero-Shot Performance - Florence-2 demonstrates impressive zero-shot capabilities across various visual tasks, outperforming many other models with more parameters.
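The prompt-based approach can be illustrated with a small sketch of how a task is selected purely through the text prompt. The task tokens below are the ones we recall from the public Florence-2 model card, so treat them as an assumption and verify before use. `build_prompt` and the friendly task names are hypothetical helpers, and the model call itself is left out so the example runs without downloading weights.

```python
# Task tokens as recalled from the Florence-2 model card (assumption: verify
# against the card before relying on them).
TASK_PROMPTS = {
    "caption": "<CAPTION>",
    "detailed_caption": "<DETAILED_CAPTION>",
    "object_detection": "<OD>",
    "phrase_grounding": "<CAPTION_TO_PHRASE_GROUNDING>",
}

# Tasks that need extra text appended after the task token.
TASKS_WITH_TEXT_INPUT = {"phrase_grounding"}


def build_prompt(task, text_input=None):
    """Hypothetical helper: turn a task name into a Florence-2 style prompt."""
    if task not in TASK_PROMPTS:
        raise ValueError(f"unknown task: {task}")
    token = TASK_PROMPTS[task]
    if task in TASKS_WITH_TEXT_INPUT:
        if not text_input:
            raise ValueError(f"task {task!r} requires text_input")
        return token + text_input
    if text_input:
        raise ValueError(f"task {task!r} does not take text_input")
    return token
```

The same image and the same model weights serve every task; only the prompt string changes, which is why no task-specific fine-tuning is needed.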

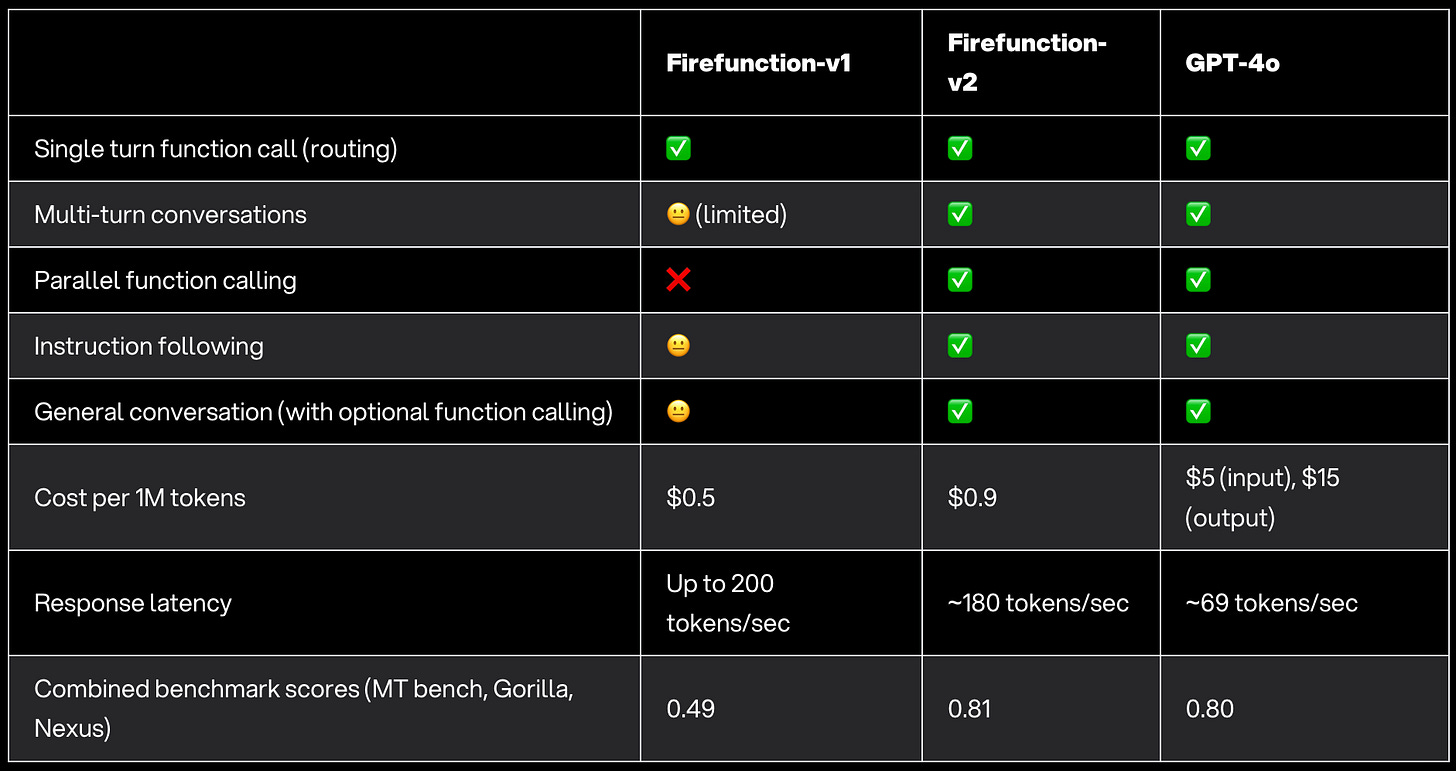

Fireworks has released Firefunction-v2, a new open-weight function-calling model that combines strong performance with low cost. It is built for real-world scenarios involving multi-turn conversations, instruction following, and parallel function calls. Firefunction-v2 achieves performance on par with GPT-4o at a fraction of the cost and with significantly faster speeds. If you’re looking for a powerful and cost-effective function-calling model, Firefunction-v2 is worth a closer look.

Key Highlights:

Performance - Firefunction-v2 performs comparably to GPT-4o on public benchmarks, scoring 0.81, while costing only $0.9 per 1M output tokens compared to GPT-4o’s $15 per 1M output tokens. This significant cost difference makes Firefunction-v2 a much more practical choice for developers and businesses.

Capabilities - Firefunction-v2 can handle parallel function calling, allowing it to execute multiple functions from a single query. This is a significant advantage for real-world use cases where users expect a more intuitive and efficient experience.

Architecture - Firefunction-v2 is built on the Llama 3 70B model, retaining its excellent chat and instruction following capabilities. This means that Firefunction-v2 can be used in a wider range of applications, including chatbots, virtual assistants, and other AI-powered tools.
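Parallel function calling is easier to picture with a small sketch of the client side: the model returns several tool calls in one turn, and the application runs each one and sends the results back. The tool-call shape below follows the OpenAI-style chat completions format, which we assume the serving API mirrors. The tools `get_weather` and `get_stock_price`, their return values, and `example_tool_calls` are hypothetical and hand-written; no model is called.

```python
import json


# Hypothetical local tools with canned results, for illustration only.
def get_weather(city):
    return {"city": city, "forecast": "sunny"}


def get_stock_price(ticker):
    return {"ticker": ticker, "price": 123.45}


TOOLS = {"get_weather": get_weather, "get_stock_price": get_stock_price}


def execute_tool_calls(tool_calls):
    """Run every tool call from one assistant turn and build the reply messages."""
    results = []
    for call in tool_calls:
        name = call["function"]["name"]
        # Arguments arrive as a JSON-encoded string in the OpenAI-style format.
        args = json.loads(call["function"]["arguments"])
        if name not in TOOLS:
            content = {"error": f"unknown tool: {name}"}
        else:
            content = TOOLS[name](**args)
        results.append(
            {"role": "tool", "tool_call_id": call["id"], "content": json.dumps(content)}
        )
    return results


# Hand-written example of two calls a model might emit for the single query
# "What's the weather in Paris and the price of ACME?" (assumed shape).
example_tool_calls = [
    {"id": "call_1", "type": "function",
     "function": {"name": "get_weather", "arguments": '{"city": "Paris"}'}},
    {"id": "call_2", "type": "function",
     "function": {"name": "get_stock_price", "arguments": '{"ticker": "ACME"}'}},
]
```

Note that "parallel" here refers to the model emitting multiple calls in a single response. This sketch executes them one after another; a real application could run them concurrently.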

OpenAI has made its first acquisition, buying enterprise analytics startup Rockset. OpenAI will integrate Rockset’s technology to strengthen the retrieval infrastructure across its product suite, including ChatGPT. Rockset’s team will also join OpenAI, marking the first time the company has integrated both technology and personnel from an acquired company.

Rockset’s CEO, Venkat Venkataramani, stated that the company will gradually transition its current customers off the platform and that some members of its team will be joining OpenAI. The financial terms of the acquisition were not disclosed.

😍 Enjoying it so far? Share it with your friends!

Tools of the Trade ⚒️

Skyvern: An open-source AI tool that automates browser-based workflows, making it easy to perform repetitive tasks on multiple websites. You can use it to automate processes like downloading invoices, filling out forms, and placing orders via a simple API.

Trelent: A secure AI chat interface with zero data retention so you can use models like Claude 3.5 and GPT-4o without compromising your data. It serves as a drop-in replacement for ChatGPT, providing secure data integrations and enterprise access control.

Vizly: AI-powered data scientist that lets you chat with your data, create interactive visualizations, and perform complex analyses. You can upload your data in CSV, Excel, or JSON formats, and immediately uncover actionable insights and generate predictive reports.

Awesome LLM Apps: Build awesome LLM apps using RAG for interacting with data sources like GitHub, Gmail, PDFs, and YouTube videos through simple texts. These apps will let you retrieve information, engage in chat, and extract insights directly from content on these platforms.

Hot Takes 🔥

If you have a problem you're trying to solve with an LLM – now you have TWO problems. ~ Les Guessing

I continue to be amazed how many software engineers have not taken the bare minimum effort to understand how LLMs work, and assume things like “it can reason.”

A trait of any kind of engineering is you look under the covers of the stuff you use, understand how it works and why. ~ Gergely Orosz

Meme of the Day 🤡

That’s all for today! See you tomorrow with more such AI-filled content.

Real-time AI Updates 🚨

⚡️ Follow me on Twitter @Saboo_Shubham for lightning-fast AI updates and never miss what’s trending!

PS: We curate this AI newsletter every day for FREE, and your support is what keeps us going. If you find value in what you read, share it with your friends by clicking the share button!
