Mistral Large 2 beats Llama 3.1 405B in Coding

PLUS: OpenAI down $5 billion with 12 months of cash in hand, Cohere's new Rerank model

Today’s top AI Highlights:

Mistral AI’s new flagship model Mistral Large 2

Cohere’s new Rerank model for faster enterprise search and RAG

OpenAI could lose $5 billion this year and run out of cash in 12 months

Stability AI’s new model generates multiple novel-view videos from a single object video

Tools for running Llama 3.1 models locally (100% free and without internet)

& so much more!

Read time: 3 mins

Latest Developments 🌍

Mistral AI is on a release spree. They have released their new flagship model, Mistral Large 2, with 123B parameters. The model surpasses its predecessor in code generation, mathematics, and reasoning, with stronger multilingual support and more advanced function calling. Mistral Large 2 is designed for single-node inference and has a 128k context window. Notably, it achieves 84% on MMLU, setting a new standard for performance relative to cost of serving.

Key Highlights:

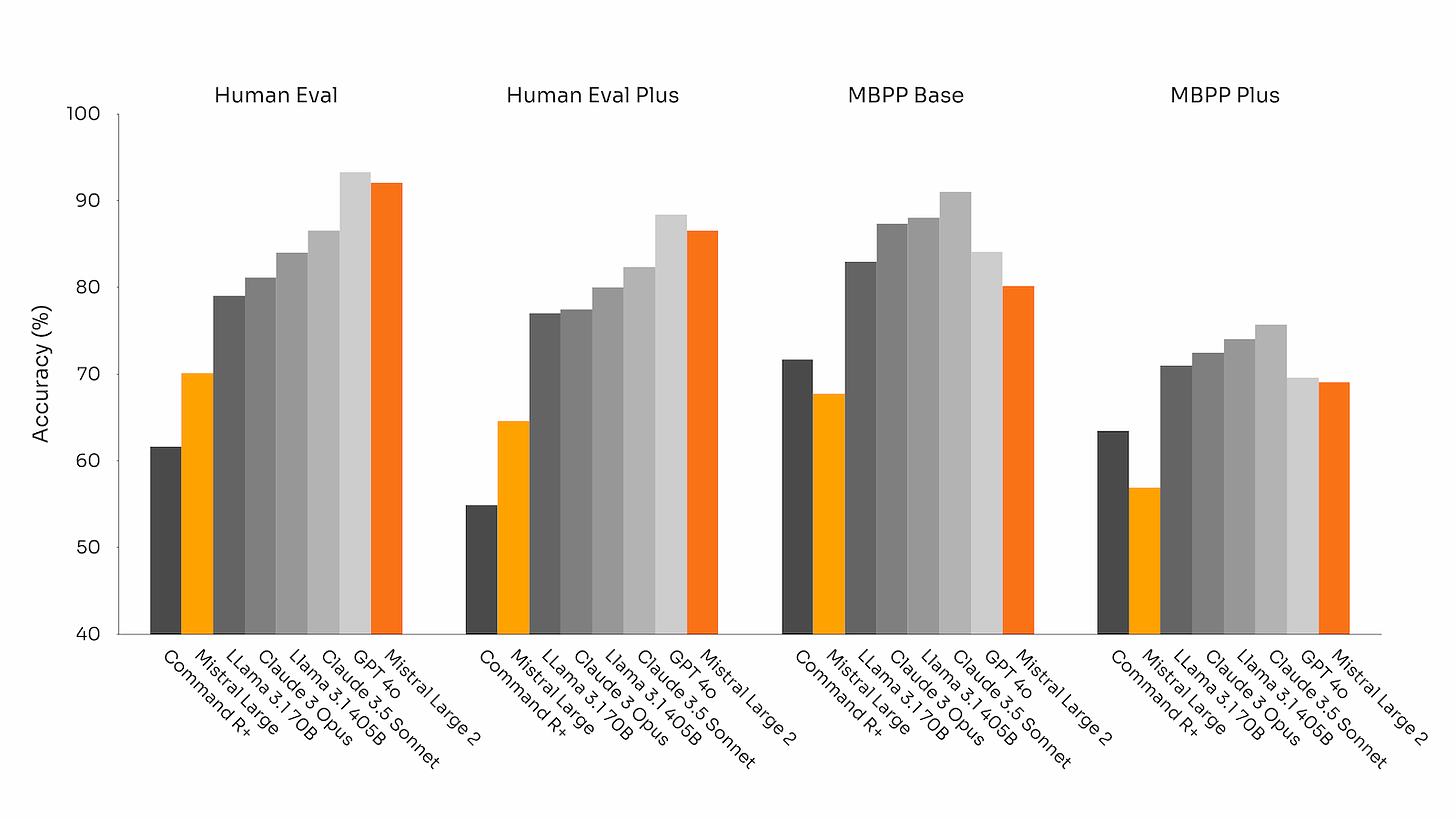

Coding - Mistral Large 2 is trained on a very large proportion of code. It outperforms much larger models including Claude 3.5 Sonnet and Llama 3.1 405B, and matches the performance of GPT-4o on HumanEval and MultiPL-E benchmarks.

Less Hallucination - To minimize hallucination, the model was fine-tuned to be more cautious and discerning in its responses. It is also trained to acknowledge when it cannot find solutions or does not have sufficient information to provide a confident answer.

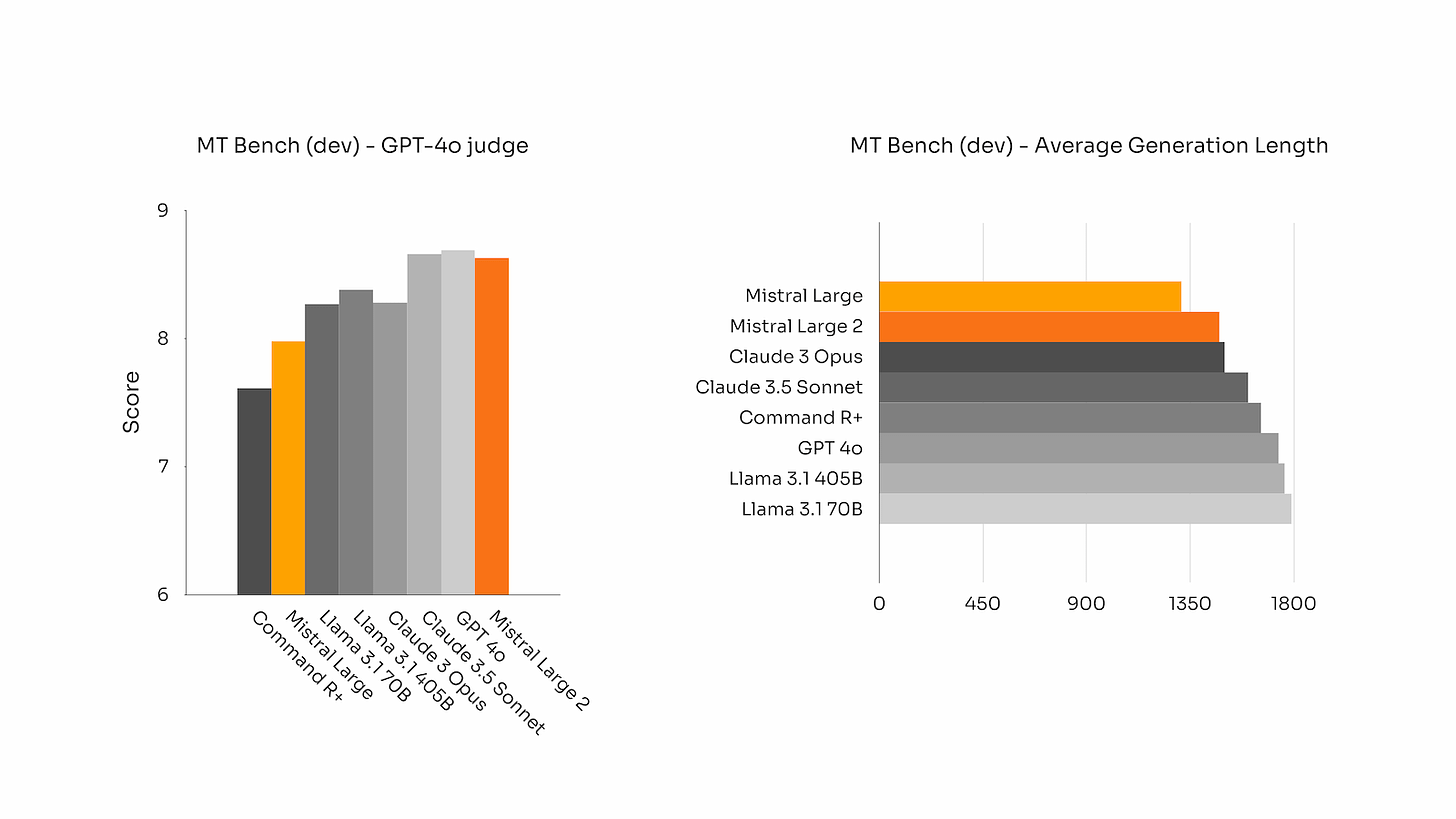

Instruction Following - Mistral Large 2 shows significant improvement in instruction following, conversational ability, and generating concise and succinct responses, crucial for business applications.

Multilingual - Mistral Large 2 excels in multiple languages, including French, German, Spanish, Dutch, Russian, Chinese, Japanese, Hindi, and more. It outperforms Cohere’s Command R+ and Llama 3.1 70B on Multilingual MMLU.

Function calling - Mistral Large 2 is equipped with enhanced function calling and retrieval skills, and can proficiently execute both parallel and sequential function calls, making it ideal for complex business applications.

Availability - Mistral Large 2 is available to chat with and fine-tune on la Plateforme, and via API. Weights for the instruct model are also available on Mistral’s website and Hugging Face.
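To make the difference between parallel and sequential function calls concrete, here is a minimal, model-free sketch. Everything in it is hypothetical and for illustration only: the tools (`get_weather`, `to_fahrenheit`), the call format, and the `run_parallel` / `run_sequential` helpers are not Mistral's API. In a real application the list of calls would come from the model's response.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical tools standing in for real business functions.
def get_weather(city: str) -> float:
    """Return a canned temperature in Celsius for the given city."""
    return {"Paris": 21.0, "Berlin": 18.0}.get(city, 15.0)

def to_fahrenheit(celsius: float) -> float:
    """Convert Celsius to Fahrenheit."""
    return celsius * 9 / 5 + 32

TOOLS = {"get_weather": get_weather, "to_fahrenheit": to_fahrenheit}

def run_parallel(calls):
    """Independent calls: run them concurrently, return results in request order."""
    with ThreadPoolExecutor() as pool:
        futures = [pool.submit(TOOLS[c["name"]], **c["arguments"]) for c in calls]
        return [f.result() for f in futures]

def run_sequential(calls):
    """Dependent calls: feed each result into the next call's `input_key` argument."""
    result = None
    for c in calls:
        args = dict(c["arguments"])
        if result is not None:
            args[c["input_key"]] = result
        result = TOOLS[c["name"]](**args)
    return result

# Two independent lookups can be executed side by side.
parallel_results = run_parallel([
    {"name": "get_weather", "arguments": {"city": "Paris"}},
    {"name": "get_weather", "arguments": {"city": "Berlin"}},
])

# The second call needs the output of the first, so the order matters.
sequential_result = run_sequential([
    {"name": "get_weather", "arguments": {"city": "Paris"}},
    {"name": "to_fahrenheit", "arguments": {}, "input_key": "celsius"},
])
```

The parallel case suits lookups that do not depend on each other, while the sequential case covers chains where one tool's output is the next tool's input.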

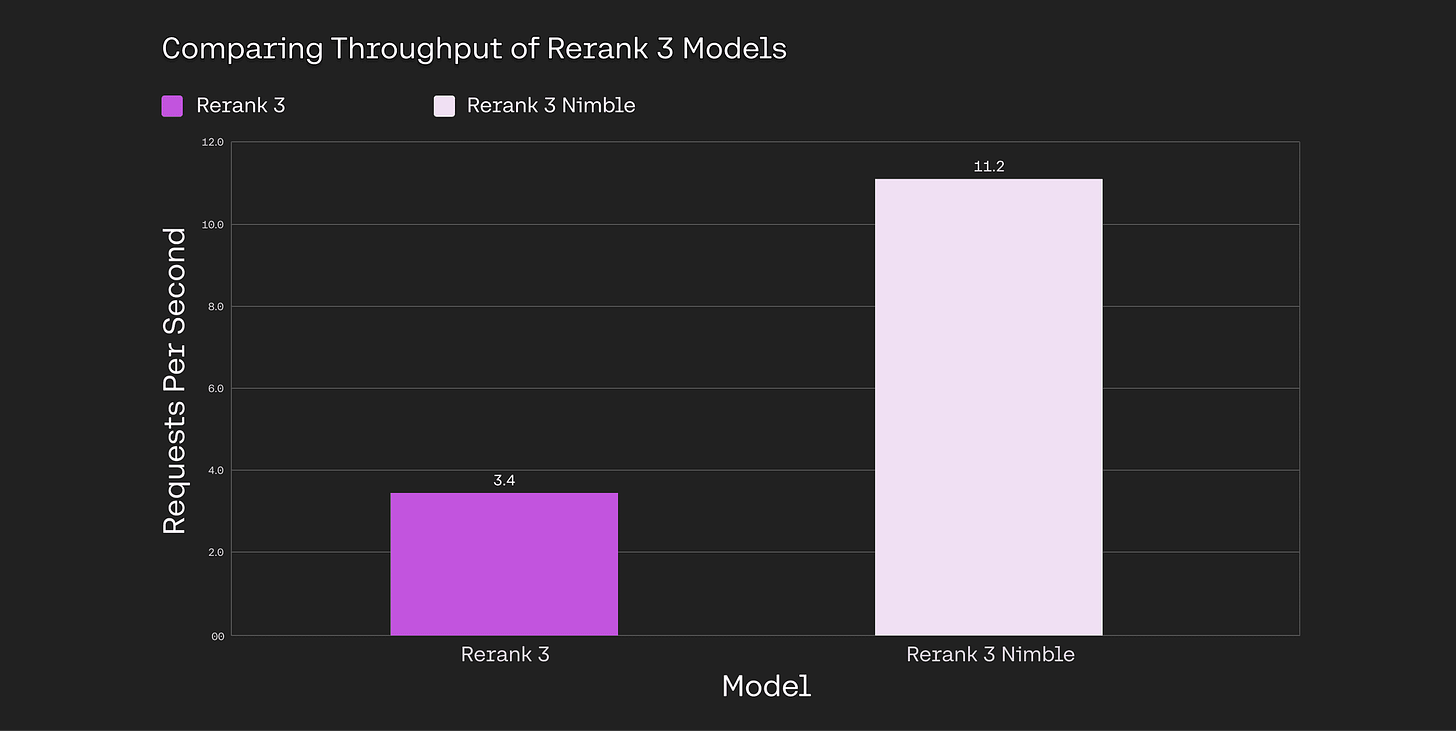

Cohere has released Rerank 3 Nimble, its newest foundation model in the Cohere Rerank model series, to enhance enterprise search and RAG systems. This model is 3x faster than its predecessor, Rerank 3, while maintaining a high level of accuracy, making it ideal for high-volume applications.

Rerank 3 Nimble reranks very long documents and processes multi-aspect and semi-structured data for user queries. This enhances existing search systems and the efficiency of RAG systems.

Key Highlights:

Improved RAG Efficiency - By integrating Rerank 3 Nimble into RAG systems, developers can ensure that only the most relevant documents are passed to the generative language model. This results in more focused content generation and reduced computational costs.

Reduced Latency - Rerank 3 Nimble offers a 3x increase in throughput compared to Rerank 3, resulting in significantly faster response times for search queries.

Integration with Existing Systems - It can be implemented with just a few lines of code, and easily integrated with existing search systems, whether they utilize traditional algorithms like BM25 or more advanced techniques.

Multilingual Support and Data Versatility - The model supports over 100 languages. It also excels at understanding and reranking complex documents, including multi-aspect data like emails and semi-structured data like JSON and tabular formats.

Availability - Rerank 3 Nimble is only available on Amazon SageMaker and for on-premise deployments. It is priced the same as existing Rerank 3 models.
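The retrieve-then-rerank pattern behind these highlights can be sketched without any external service. Everything here is an assumption for illustration: `keyword_score` is a crude stand-in for a first-stage retriever such as BM25, and `rerank_score` is a toy placeholder for a call to a reranking model like Rerank 3 Nimble. Neither is Cohere's actual API.

```python
def tokenize(text: str) -> list[str]:
    """Lowercase whitespace tokenizer, kept deliberately simple."""
    return text.lower().split()

def keyword_score(query: str, doc: str) -> int:
    """First stage (stand-in for BM25): number of distinct query terms found in doc."""
    doc_terms = set(tokenize(doc))
    return sum(1 for term in set(tokenize(query)) if term in doc_terms)

def rerank_score(query: str, doc: str) -> float:
    """Second stage (placeholder for a reranker): share of doc tokens that are query terms."""
    query_terms = set(tokenize(query))
    tokens = tokenize(doc)
    if not tokens:
        return 0.0
    return sum(1 for t in tokens if t in query_terms) / len(tokens)

def retrieve_then_rerank(query, docs, k_retrieve=3, top_n=2):
    """Retrieve a broad candidate set cheaply, then keep only the best reranked docs."""
    candidates = sorted(docs, key=lambda d: keyword_score(query, d), reverse=True)[:k_retrieve]
    reranked = sorted(candidates, key=lambda d: rerank_score(query, d), reverse=True)
    return reranked[:top_n]

docs = [
    "refund policy for enterprise customers",
    "our enterprise office is in Toronto and the refund desk is closed on weekends and holidays",
    "shipping times for standard orders",
    "enterprise pricing overview",
]

# Only these documents would be passed on to the generative model.
context = retrieve_then_rerank("enterprise refund policy", docs)
```

In this toy run the long, loosely related document makes it through the first stage but is dropped by the second, which is the effect that keeps the prompt sent to the language model short and focused.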

Quick Bites 🤌

As per a report, OpenAI is projected to lose $5 billion this year and may run out of cash in 12 months unless it raises more funds. Its expenses, including $7 billion for training and inference and $1.5 billion in workforce costs, far exceed its revenue of $2 billion from ChatGPT.

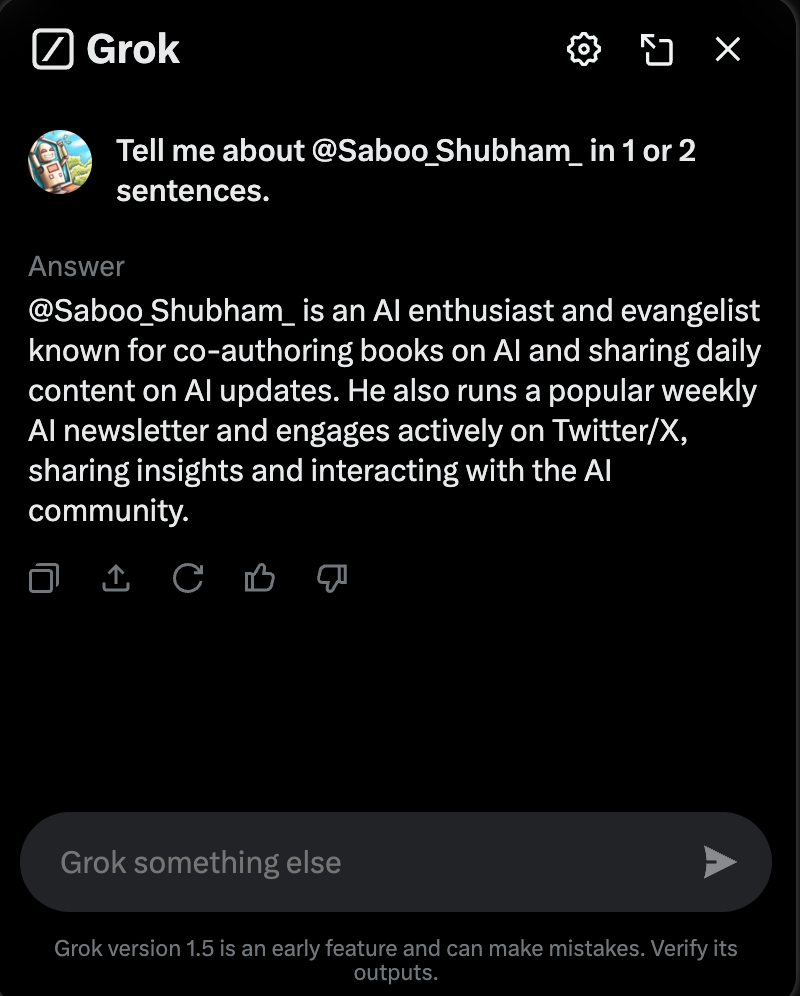

X has launched a “More About This Account” feature for paid users. It uses Grok AI to provide an AI-generated summary of an X account when a user hovers over its handle or display name.

OpenAI-backed AI-powered legal tech startup Harvey has raised $100 million in a Series C round led by Google Ventures, bringing its total funding to $206 million and valuing the company at $1.5 billion. The new funds will be used to enhance AI models, expand services globally, and grow its team.

Stability AI has released Stable Video 4D which generates dynamic multi-angle videos from a single object video, offering eight different views in about 40 seconds. It’s currently in the research phase and also available on Hugging Face.

Converts a single object video into multiple novel-view videos from eight different angles.

Generates 5 frames across 8 views in about 40 seconds.

Users can specify camera angles for tailored creative outputs.

😍 Enjoying it so far? Share it with your friends!

Tools of the Trade ⚒️

Run Llama 3.1 models locally on your computer (100% free and without internet) with the following tools:

Ollama - An open-source project that provides an easy way to download and run models like Llama 3.1 on regular PCs.

LM Studio - A desktop application that lets you run open-source LLMs offline on your computer.

GPT4All - An open-source project that provides tools and software to run powerful LLMs on your computer, without expensive GPU hardware or cloud services.

Awesome LLM Apps: Build awesome LLM apps using RAG for interacting with data sources like GitHub, Gmail, PDFs, and YouTube videos through simple texts. These apps will let you retrieve information, engage in chat, and extract insights directly from content on these platforms.
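For the local Llama 3.1 setups above, a model served by Ollama can also be queried from Python over Ollama's local HTTP API. This is a minimal sketch, assuming Ollama is running on its default port (11434) and that the model has been pulled under the tag `llama3.1`. The helper names `build_request` and `ask_local_llama` are our own, not part of Ollama.

```python
import json
import urllib.request

# Assumed default address of a locally running Ollama server.
OLLAMA_URL = "http://localhost:11434/api/generate"

def build_request(prompt: str, model: str = "llama3.1") -> urllib.request.Request:
    """Build a non-streaming generate request for the local Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

def ask_local_llama(prompt: str, model: str = "llama3.1") -> str:
    """Send the prompt to the local model and return the generated text."""
    with urllib.request.urlopen(build_request(prompt, model)) as resp:
        return json.loads(resp.read())["response"]
```

With the Ollama server running, calling `ask_local_llama("Explain RAG in one sentence.")` returns the model's reply as a string, and no request leaves your machine.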

Hot Takes 🔥

A co-worker once said:

“People shouldn’t learn how to code - they should learn how to solve problems.”

He was then fired a month later for being horrible at programming. ~ Jack Forge

being afraid of existential risk from ai progress is prudent and advisable and if you reflexively started making fun of this viewpoint in the last ~two years after ai entered your radar you need to self reflect ~ roon

calling it now:

harvey (the legal AI co) will end up being roadkill on the side of the highway. complete smoke and mirrors company. ~ emily is in sf

Meme of the Day 🤡

Getting all your apps to finally work with CUDA.

— Bojan Tunguz (@tunguz)

1:25 AM • Jul 21, 2024

That’s all for today! See you tomorrow with more such AI-filled content.

Real-time AI Updates 🚨

⚡️ Follow me on Twitter @Saboo_Shubham for lightning-fast AI updates and never miss what’s trending!

PS: We curate this AI newsletter every day for FREE, and your support is what keeps me going. If you find value in what you read, share it with your friends by clicking the share button below!
