Mistral 7B beats GPT-4o with RAG Fine-tuning

PLUS: ByteDance and Broadcom's AI chip, OpenAI's new acquisition

Today’s top AI Highlights:

Building a personal AI code assistant with an open-source LLM that outperforms GPT-4o and Claude 3 Opus

ByteDance is partnering with Broadcom to build an advanced AI chip

Sakana AI uses an LLM to invent better ways to train an LLM

OpenAI buys remote video collaboration startup Multi

Open source alternative to Perplexity AI

& so much more!

Read time: 3 mins

Latest Developments 🌍

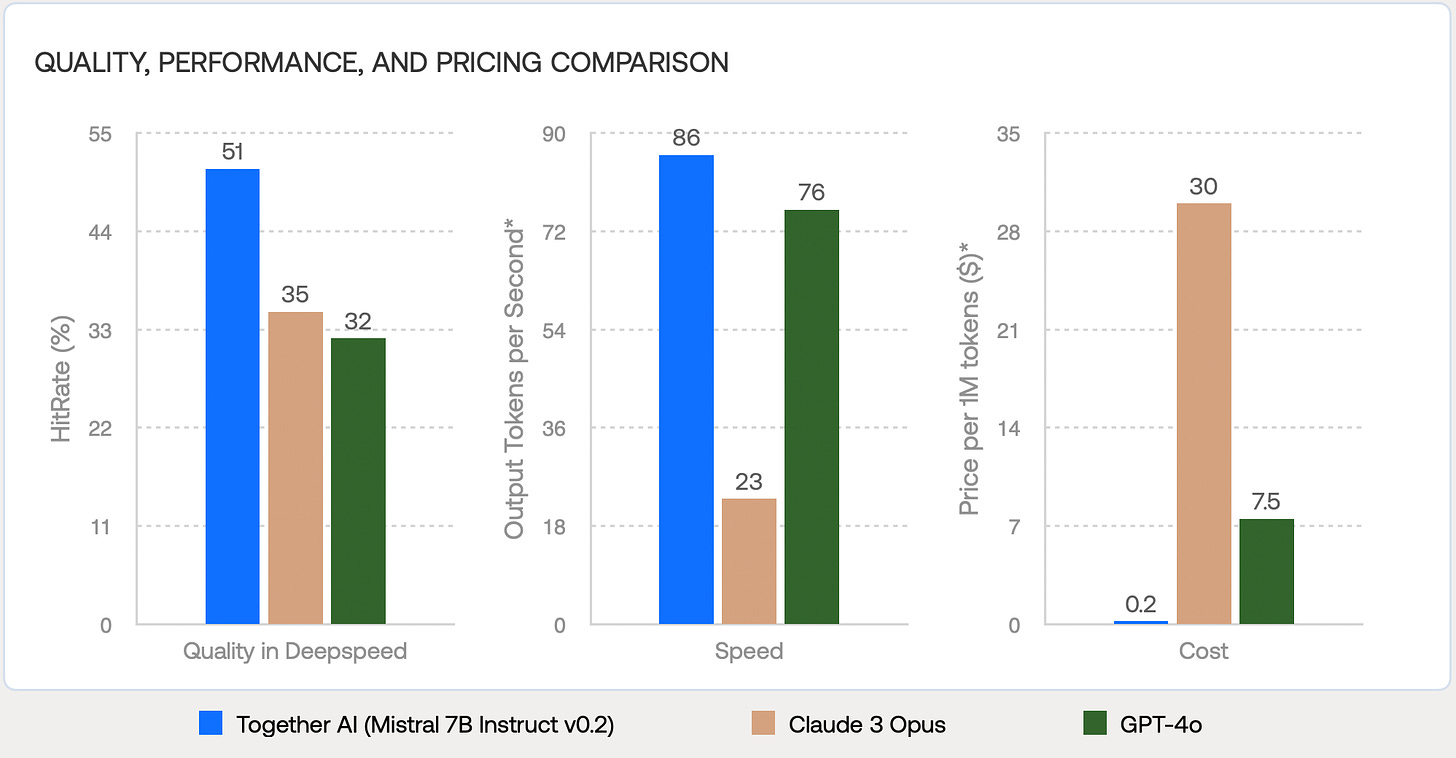

The Together AI team has built a new personalized code assistant using Mistral 7B Instruct v0.2, an open-source LLM, and Retrieval-Augmented Generation (RAG) fine-tuning. This approach tackles the shortcomings of LLMs in code generation, such as producing inaccurate or outdated code. The RAG fine-tuned models showed significant performance improvements over existing models like Claude 3 Opus and GPT-4o, while also achieving a significant cost reduction.

Key Highlights:

Substantial Accuracy Gains: The RAG fine-tuned models achieved up to 16% better accuracy than Claude 3 Opus and up to 19% improvement over GPT-4o when generating code.

Dramatic Speed and Cost Reductions: The fine-tuned models offered a significant speed boost (3.7x faster than Claude 3 Opus and 1.1x faster than GPT-4o), as well as a dramatic cost reduction (150x cheaper than Claude 3 Opus and 37.5x cheaper than GPT-4o).

Up-to-Date Code Generation: The RAG fine-tuning method integrated relevant code snippets from specific code repositories into the model's training process, ensuring generated code is aligned with the latest coding standards.

Practical Tool for Developers: This new approach provides developers with a more practical and valuable tool for generating accurate and contextually appropriate code, addressing the limitations of traditional LLMs.
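To make the "Up-to-Date Code Generation" point concrete, here is a minimal sketch of how a RAG fine-tuning example might be assembled: retrieve the repository snippets most relevant to a task and put them into the training prompt. This is an illustration under assumptions, not Together AI's actual pipeline. The `Snippet` class, the file paths, the prompt layout, and the token-overlap retriever (a crude stand-in for an embedding-based retriever) are all hypothetical.

```python
import re
from dataclasses import dataclass


@dataclass
class Snippet:
    path: str  # hypothetical file path inside the target repository
    code: str


def tokenize(text: str) -> set[str]:
    """Lowercased identifier-like tokens; a crude stand-in for an embedding model."""
    return set(re.findall(r"[a-z_][a-z0-9_]*", text.lower()))


def retrieve(query: str, corpus: list[Snippet], k: int = 2) -> list[Snippet]:
    """Rank snippets by Jaccard token overlap with the query and keep the top k."""
    q = tokenize(query)

    def score(snippet: Snippet) -> float:
        t = tokenize(snippet.code)
        union = q | t
        return len(q & t) / len(union) if union else 0.0

    return sorted(corpus, key=score, reverse=True)[:k]


def build_example(query: str, answer: str, corpus: list[Snippet], k: int = 2) -> dict[str, str]:
    """Create one prompt/completion record with retrieved code placed in the prompt."""
    context = "\n\n".join(f"# {s.path}\n{s.code}" for s in retrieve(query, corpus, k))
    prompt = f"Relevant repository code:\n{context}\n\nTask: {query}\n"
    return {"prompt": prompt, "completion": answer}


# Toy repository, invented for this example.
corpus = [
    Snippet("utils/io.py", "def load_config(path):\n    return json.load(open(path))"),
    Snippet("utils/math.py", "def clamp(x, lo, hi):\n    return max(lo, min(x, hi))"),
    Snippet("cli/main.py", "def main():\n    config = load_config('app.json')"),
]
example = build_example(
    "call load_config to read app.json",
    "config = load_config('app.json')",
    corpus,
)
print(example["prompt"])
```

Records like `example` would then be collected into a fine-tuning dataset, so the model learns to lean on retrieved repository code at training time as well as at inference time.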

ByteDance, the company behind TikTok, is collaborating with U.S. chipmaker Broadcom to develop an advanced AI processor. The move aims to secure a stable supply of high-end chips for ByteDance amid ongoing U.S.-China tensions. The 5-nanometer chip, a custom-designed ASIC, will comply with U.S. export restrictions and will be manufactured by TSMC in Taiwan.

Key Highlights:

Strategic partnership - The partnership between ByteDance and Broadcom, existing business partners, will help ByteDance cut procurement costs and ensure access to advanced chips.

Chip specifications - The new chip, designed to comply with U.S. export regulations, will be manufactured using TSMC’s 5-nanometer process, a notable step up in AI chip technology for ByteDance.

AI ambitions - ByteDance’s AI ambitions are evident in its development of Doubao, a ChatGPT-like chatbot service, and its purchases of Nvidia and Huawei chips. The company's focus on AI is a key driver behind its collaboration with Broadcom.

Researchers at Sakana AI have used LLMs to invent better ways to train LLMs, a step toward automating AI research. The approach, dubbed ‘LLM²,’ has LLMs generate hypotheses and write code, effectively turning them into AI researchers. In a recent open-source project, the researchers prompted LLMs to propose and refine new preference optimization algorithms. The result was a new state-of-the-art algorithm called DiscoPOP, which significantly outperforms existing methods at aligning LLMs with human preferences.

Key Highlights:

LLM-Driven Discovery Process: The study focused on the potential of LLMs to discover new preference optimization algorithms. The researchers devised a three-step process: prompting the LLM with a task, having the LLM generate a hypothesis and code for a new method, and then evaluating the method’s performance.

Evolutionary Approach: The researchers employed an evolutionary LLM approach, where the LLM acts as a code-level mutation operator. This process involves prompting the LLM with a task and problem description, letting it generate code for a new method, and then using the code to evaluate the method’s performance. This iterative process allows the LLM to refine its proposals and discover new and improved algorithms.

DiscoPOP: This new algorithm was discovered by an LLM and surpasses the performance of existing human-designed algorithms, achieving state-of-the-art results in aligning LLMs with human preferences.
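The propose, generate, evaluate loop described above can be sketched in a few lines. This is a toy illustration under stated assumptions, not Sakana AI's code: `propose` is a hypothetical stand-in that returns canned candidates instead of calling an LLM, the candidates are ordinary textbook losses rather than DiscoPOP, and `evaluate` uses a placeholder score where the real process would train a model with the candidate loss and measure how well it aligns with human preferences.

```python
import math
from typing import Callable

Archive = list[tuple[str, float]]  # (candidate source code, fitness score)

# Canned "LLM outputs" for illustration: each string defines loss(m) on a
# preference margin m (larger m means the chosen answer is more favored).
CANNED_PROPOSALS = [
    "def loss(m):\n    return max(0.0, 1.0 - m)",             # hinge
    "def loss(m):\n    return math.log(1.0 + math.exp(-m))",  # logistic
    "def loss(m):\n    return math.exp(-m)",                  # exponential
]


def propose(archive: Archive, step: int) -> str:
    """Hypothetical stand-in for the LLM mutation operator.

    A real system would put the task description and the archive of earlier
    candidates with their scores into a prompt; this stub ignores the archive.
    """
    return CANNED_PROPOSALS[step % len(CANNED_PROPOSALS)]


def compile_candidate(source: str) -> Callable[[float], float]:
    """Turn generated source into a callable. Untrusted code needs a sandbox."""
    namespace: dict = {"math": math}
    exec(source, namespace)
    return namespace["loss"]


TOY_MARGINS = [2.0, 1.0, 0.5, -0.5]  # invented data for the placeholder score


def evaluate(loss_fn: Callable[[float], float]) -> float:
    """Placeholder fitness: negative mean loss on the toy margins."""
    return -sum(loss_fn(m) for m in TOY_MARGINS) / len(TOY_MARGINS)


def evolve(generations: int) -> tuple[Archive, tuple[str, float]]:
    archive: Archive = []
    for step in range(generations):
        source = propose(archive, step)      # LLM proposes code
        try:
            score = evaluate(compile_candidate(source))
        except Exception:
            score = float("-inf")            # broken candidates rank last
        archive.append((source, score))      # feedback for the next proposal
    best = max(archive, key=lambda item: item[1])
    return archive, best


archive, best = evolve(generations=3)
print(best[0])
```

The design point is the feedback path: every candidate and its score goes back into the archive, which is what lets the proposer refine its next suggestion rather than sample blindly.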

OpenAI has made its second acquisition: Multi, a startup that specializes in video-first collaboration platforms. The deal, an acqui-hire, brings Multi’s team of five employees to OpenAI.

Multi’s platform, designed for remote teams, allows for shared screen viewing, customized shortcuts, and deep links for documents. The company, which raised $13 million in funding, will shut down on July 24.

The most likely outcome is that OpenAI integrates Multi’s video capabilities into ChatGPT. This could let users hold video-based conversations with the AI, making it more engaging and interactive.

Tools of the Trade ⚒️

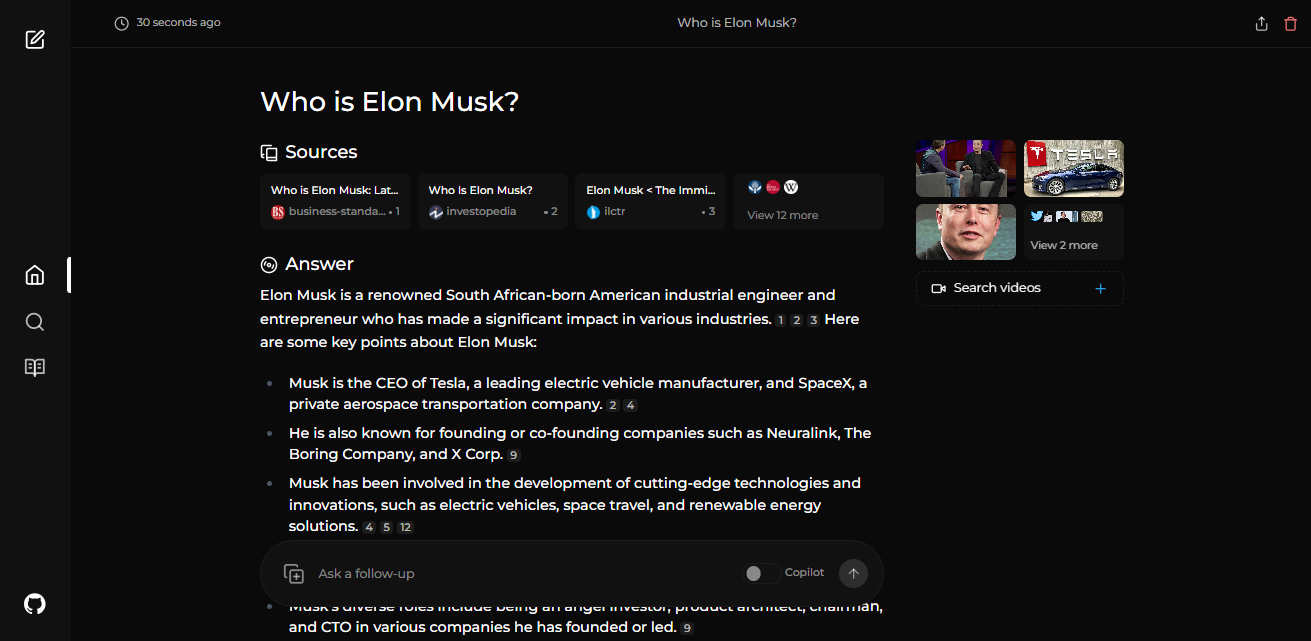

Perplexica: Open-source AI-powered search engine that provides accurate and up-to-date answers by deeply searching the internet and citing sources. It offers various focus modes for specific tasks like academic research, writing assistance, and video searches so you get relevant results tailored to your needs.

Vly AI: Build and deploy web applications without writing any code. You can launch SaaS businesses or enterprise tools quickly, with built-in scalability, security, and reliability.

FastML: Python boilerplate to help you quickly build and deploy machine learning pipelines. It includes scripts for data handling, modeling, and deployment, making it easy to integrate into your existing infrastructure.

Awesome LLM Apps: Build LLM apps that use RAG to interact with data sources like GitHub, Gmail, PDFs, and YouTube videos through simple text prompts. These apps let you retrieve information, chat, and extract insights directly from content on these platforms.

Hot Takes 🔥

If you look at the trajectory of improvement, systems like GPT-3 were toddler-level intelligence and then systems like GPT-4 are like smart high schooler intelligence. In the next couple of years we’re looking at PhD-level intelligence for specific tasks. ~ Mira Murati

There will eventually be a big opportunity to build a password manager explicitly to share passwords with AI agents ~ Dan Shipper

I bet the whole $7T media thing Sama did with the UAE was to get the US Govt to FOMO in and nationalize OpenAI.

Genius move in retrospect. Only one player can be the official Manhattan project.

My bet is every big player now gets nationalized to compete. ~ Beff – e/acc

That’s all for today! See you tomorrow with more such AI-filled content.

Real-time AI Updates 🚨

⚡️ Follow me on Twitter @Saboo_Shubham for lightning-fast AI updates and never miss what’s trending!

PS: We curate this AI newsletter every day for FREE, and your support is what keeps me going. If you find value in what you read, share it with your friends!
