- unwind ai

Microsoft's Studio to Build Multi-Agent AI Workflows

PLUS: Share and remix Artifacts, OpenAI blocks API access in China

Today’s top AI Highlights:

Microsoft’s low-code studio for building multi-agent AI workflows

Lost in the Pages: LLMs struggle with book-length text

Create, publish, share and even remix Artifacts in Claude

OpenAI blocks its AI models in China, but developers can still use them via Microsoft Azure

Quora’s Poe now has an Artifacts-like feature to create custom web apps within the chat

& so much more!

Read time: 3 mins

Latest Developments 🌍

Microsoft has released AutoGen Studio, a low-code interface for creating multi-agent AI applications. Built on the existing AutoGen framework, it lets developers build, test, and share AI agents and workflows, and create complex agent interactions with minimal coding. The goal is to make building and deploying these sophisticated AI systems accessible to everyone.

Key Highlights:

Author Agent Workflows - Users can choose from pre-defined agents or create their own, customizing them with different skills, prompts, and foundation models.

Debugging and Testing - Developers can test their workflows in real-time and observe the interactions between agents. They can analyze the “inner monologue” of the agents, review generated outputs, and get insights into performance metrics like token usage and API calls.

Reusable Components and Deployment - Users can download the skills, agents, and workflow configurations they create, and share and reuse these artifacts. They can also export workflows as APIs to deploy them in other applications or cloud services.

Addressing Real-world Problems - AutoGen Studio is already being used to prototype tasks like market research, structured data extraction, video generation, and more.

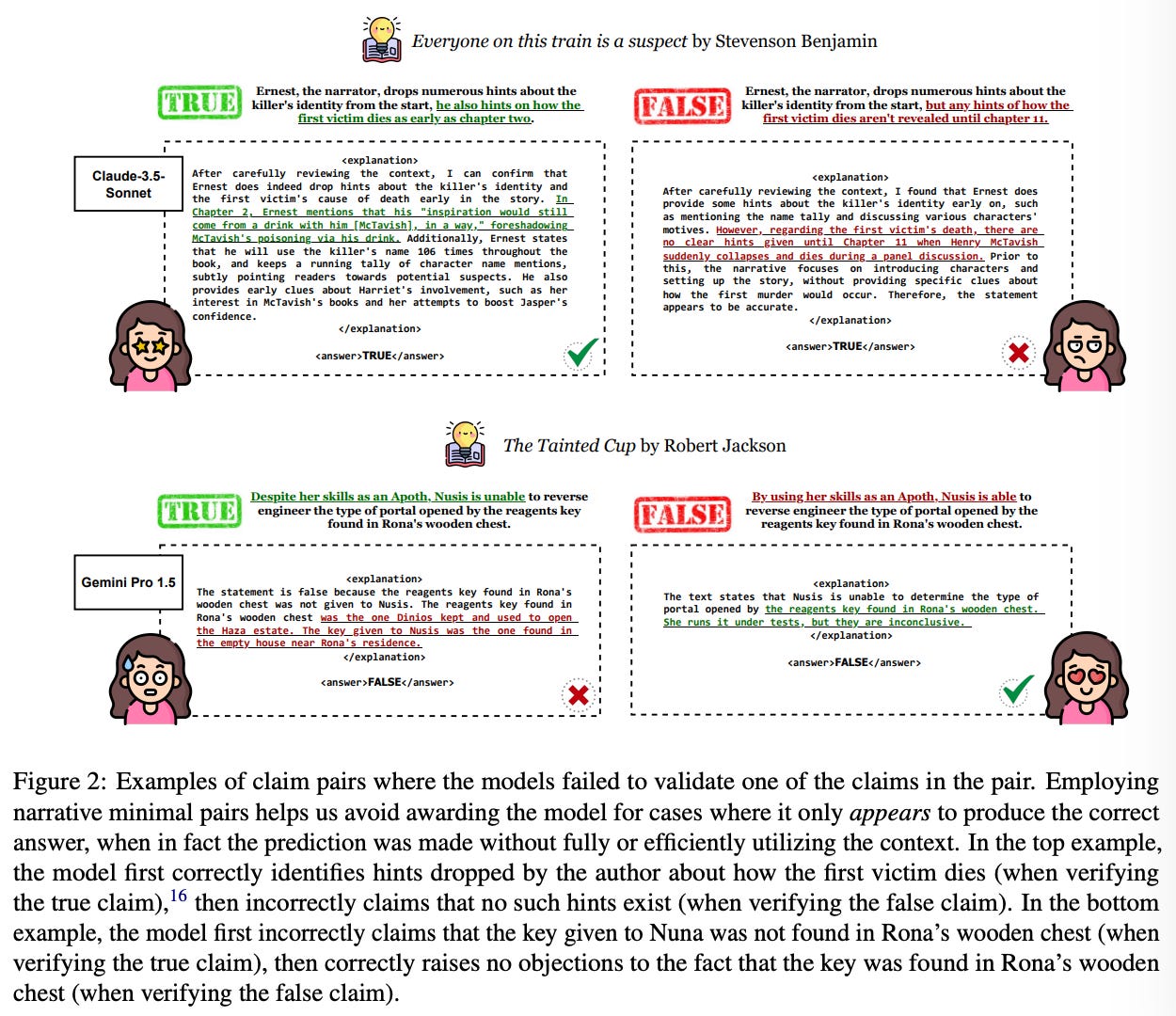

Current long-context LLMs struggle to understand and reason over information in book-length texts. While these models may nail basic retrieval tasks like needle-in-a-haystack, they fall short when they need to synthesize information from different parts of a long document.

To address this, researchers have created NOCHA, a dataset of 1,001 true/false claim pairs about fictional books that tests an LLM’s ability to comprehend and reason over lengthy narratives. To make the benchmark more challenging and realistic, NOCHA requires models to analyze the entire text, including implicit information and nuances specific to each narrative. Early results show that even the most advanced LLMs struggle with this level of complex reasoning.

Key Highlights:

Open-weight models perform poorly - All open-weight LLMs tested performed poorly on NOCHA. In contrast, GPT-4o achieved the highest accuracy at 55.8%, still a long way from perfect understanding.

Global reasoning poses a major hurdle - LLMs generally performed better on NOCHA pairs requiring sentence-level retrieval than on those demanding global reasoning, revealing a significant gap in their ability to connect information across large portions of text.

Model explanations are often unreliable - Even when a model’s final answer was correct, its explanations for its true/false judgments were often inaccurate. Models can reach the correct conclusion through faulty reasoning.

Speculative fiction proves most challenging - LLMs showed significantly lower accuracy on claims about speculative fiction than on those about historical or contemporary settings. Models struggle to grasp complex fictional worlds and the rules unique to each narrative.
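NOCHA scores claims in pairs. As we understand the benchmark's setup (check the paper for exact details), a model earns credit for a pair only when it labels the true claim True and its false counterpart False, so random guessing lands at about 25% rather than 50%. The minimal Python sketch below illustrates that scoring rule; the function names and the (verdict on true claim, verdict on false claim) tuple layout are our own hypothetical choices, not the benchmark's released code.

```python
from typing import List, Tuple

# Hypothetical layout: each pair holds the model's verdicts (True/False)
# for the true claim and for its false counterpart, in that order.
Pair = Tuple[bool, bool]


def pair_accuracy(predictions: List[Pair]) -> float:
    """Fraction of pairs where the true claim was judged True AND
    the false claim was judged False."""
    if not predictions:
        return 0.0
    correct = sum(1 for on_true, on_false in predictions if on_true and not on_false)
    return correct / len(predictions)


def claim_accuracy(predictions: List[Pair]) -> float:
    """Per-claim accuracy for comparison: every claim is scored on its own."""
    if not predictions:
        return 0.0
    hits = sum(int(on_true) + int(not on_false) for on_true, on_false in predictions)
    return hits / (2 * len(predictions))


if __name__ == "__main__":
    # Toy predictions from a model that always answers True.
    always_true = [(True, True)] * 4
    print(claim_accuracy(always_true))  # 0.5
    print(pair_accuracy(always_true))   # 0.0
```

A model that always answers "True" scores 50% per claim but 0% per pair, which is why pair-level scoring is a stricter test of whether a model actually understood the book.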

Quick Bites 🤌

Artifacts in Claude.ai can now be published and shared with others. You can also open an Artifact someone shared with you in a new chat with Claude and remix it with your own spin! (Source)

OpenAI is blocking API access to its AI models in China, citing its policy of restricting usage in unsupported regions. The block doesn’t extend to Microsoft, however: Microsoft has confirmed that its Azure OpenAI Service, which operates in China through a joint venture, will continue to offer access to the models for its Chinese customers. (Source)

Two executives from Humane, the startup behind the AI Pin, Brooke Hartley Moy and Ken Kocienda, have left the company to found Infactory, an AI fact-checking search company for enterprise clients such as newsrooms and research facilities. The platform will source information directly from trusted providers to reduce the risk of AI hallucinations. The company is currently at the pre-seed stage. (Source)

Quora’s Poe has a new feature, “Previews”, that lets you create, interact with, and share custom web applications generated directly in chats on Poe. Previews works particularly well with LLMs that excel at coding, including Claude 3.5 Sonnet, GPT-4o, and Gemini 1.5 Pro. You can create custom interactive experiences like games, animations, and data visualizations, regardless of your programming ability. (Source)

We’re excited to introduce Previews, a new feature that lets you see and interact with web applications generated directly in chats on Poe. Previews works particularly well with LLMs that excel at coding, including Claude 3.5 Sonnet, GPT-4o, and Gemini 1.5 Pro. (1/5)

— Poe (@poe_platform)

3:29 PM • Jul 8, 2024

😍 Enjoying it so far? Share it with your friends!

Tools of the Trade ⚒️

Ollama 0.2: This version adds concurrency through two new features. Parallel requests let a single model serve multiple chat sessions at the same time, and you can load several different models simultaneously for tasks like RAG and running agents.

Wheebot: Create and edit landing pages within minutes using WhatsApp. Just describe your requirements in plain English, and Wheebot will generate and update your site instantly, all through secure, end-to-end encrypted chats.

BuildBetter: Uses AI to help product teams search recordings, generate call summaries, and update documentation efficiently. It claims to cut the share of time spent on operational work to under 30%, compared with an industry average of 73%.

Awesome LLM Apps: A collection of LLM apps that use RAG to let you interact with data sources like GitHub, Gmail, PDFs, and YouTube videos through simple text prompts. These apps let you retrieve information, chat, and extract insights directly from content on these platforms.

Hot Takes 🔥

OpenAI blocking API access (incl. API) from China since today, new domain eu [.] api [.] openai [.] com added yesterday - sounds like the rest of the world is getting new models/API updates soon? ~ Tibor Blaho

The gap between the people who have put in the effort to figure out how to make AI work for them and those who think it's a silly stochastic parrot is winding at a pretty rapid pace. We are still early but at a certain point it's going to be next to impossible to close that gap ~ xjdr

the biggest drivers of ai pause sentiment won't be fears of x-risk but mass confusion as we cross the event horizon

when sci/tech progress outpaces our sum collective understanding, the default won't be to lay back and relax imo

even benign uncertainty is quite scary to us ~ Heraklines

Meme of the Day 🤡

That’s all for today! See you tomorrow with more such AI-filled content.

Real-time AI Updates 🚨

⚡️ Follow me on Twitter @Saboo_Shubham for lightning-fast AI updates and never miss what’s trending!

PS: We curate this AI newsletter every day for FREE. Your support is what keeps me going. If you find value in what you read, share it with your friends by clicking the share button below!
