- unwind ai


Meta Llama-3 405B Preview in WhatsApp

PLUS: Updates to Google's Vertex AI, Open LLM Leaderboard v2

Today’s top AI Highlights:

Meta rolls out Llama-3 405B model in Meta AI to select users

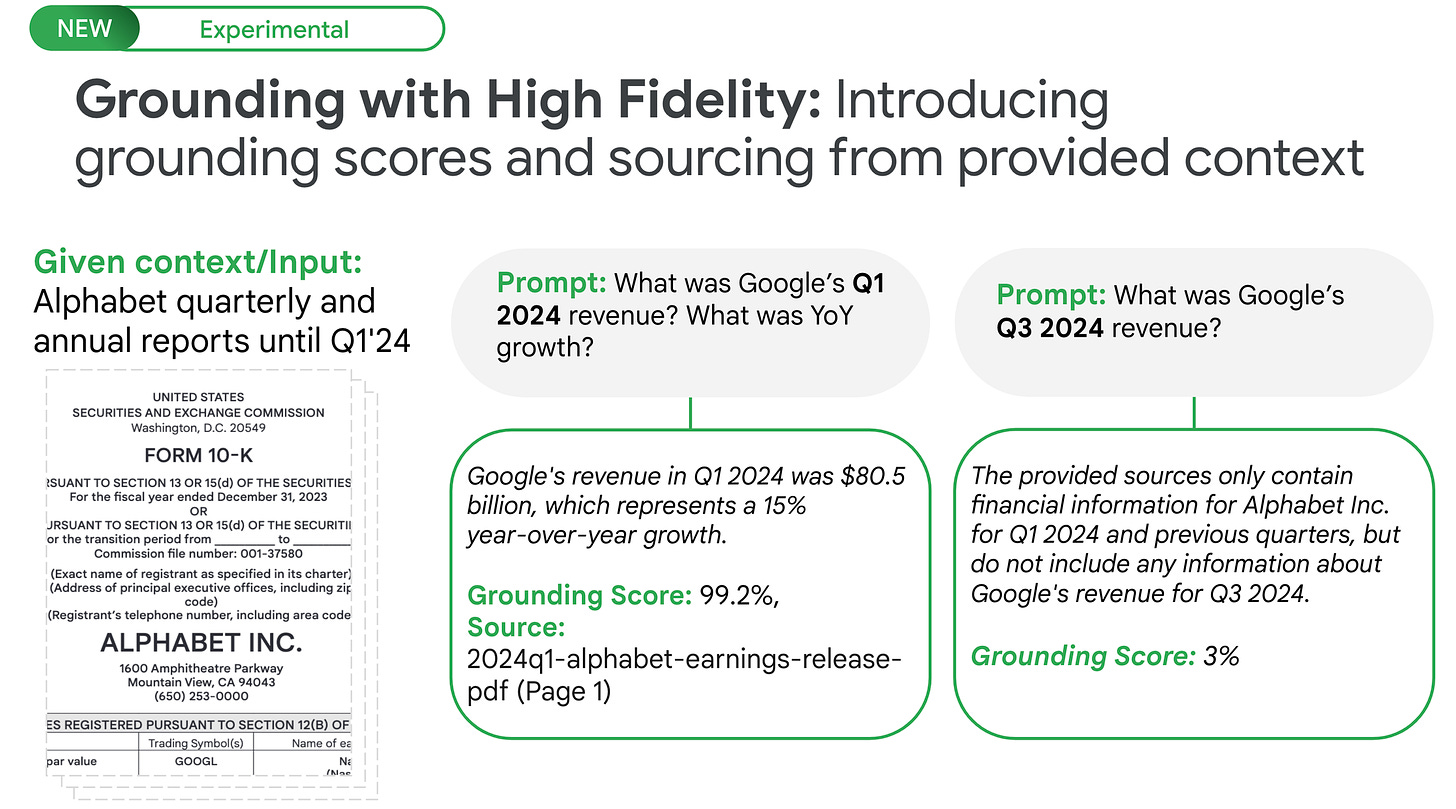

Google makes Vertex AI enterprise-ready with enhanced grounding

LangChain makes building reliable AI agents at scale a reality

Hugging Face introduces Open LLM Leaderboard v2 with new benchmarks

Run Llama-3 on someone else’s computer using the world’s largest peer-to-peer network for AI agents

& so much more!

Read time: 3 mins

Latest Developments 🌍

Google Cloud has introduced new features in Vertex AI, its ML development platform, to improve the accuracy and reliability of generative AI in enterprise applications. The updates address a key concern among AI adopters: LLMs don’t know information outside their training data and can “hallucinate,” producing answers that sound convincing but are inaccurate.

Key Highlights:

Dynamic Retrieval for Google Search Grounding - Vertex AI will automatically decide whether to answer from Gemini’s internal knowledge or ground the response in live Google Search results.

High-Fidelity Mode - Developers can restrict AI responses to information sourced solely from their own corporate datasets.

Third-Party Datasets - Integrate specialized data from providers like Moody’s, MSCI, Thomson Reuters, and ZoomInfo into your AI applications for accurate responses in specialized domains like finance and market analysis.

Enhanced Vector Search - Vertex AI’s Vector Search, used for finding similar items such as images, now supports “hybrid search,” which combines vector-based search with traditional keyword search to improve the relevance of results.
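The announcement doesn’t say exactly how Vertex AI merges the two result sets. One common, generic way to fuse a keyword ranking with a vector ranking is Reciprocal Rank Fusion (RRF), sketched below under the assumption that each retriever returns an ordered list of document IDs. The function name, the document IDs, and the conventional constant `k=60` are illustrative choices, not Vertex AI’s API.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings, k=60):
    """Merge several ranked lists of document IDs into one hybrid ranking.

    Each document earns 1 / (k + rank) from every list it appears in
    (ranks start at 1), so items ranked highly by both keyword search and
    vector search rise to the top.
    """
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken by ID so the output is deterministic.
    return sorted(scores, key=lambda d: (-scores[d], d))


# Hypothetical results for the same query from the two retrievers.
keyword_hits = ["doc_a", "doc_b", "doc_c"]  # e.g. BM25-style keyword search
vector_hits = ["doc_c", "doc_a", "doc_d"]   # e.g. embedding similarity search

print(reciprocal_rank_fusion([keyword_hits, vector_hits]))
# ['doc_a', 'doc_c', 'doc_b', 'doc_d']
```

RRF looks only at ranks, not raw scores, which sidesteps the fact that keyword relevance scores and vector similarities live on different scales.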

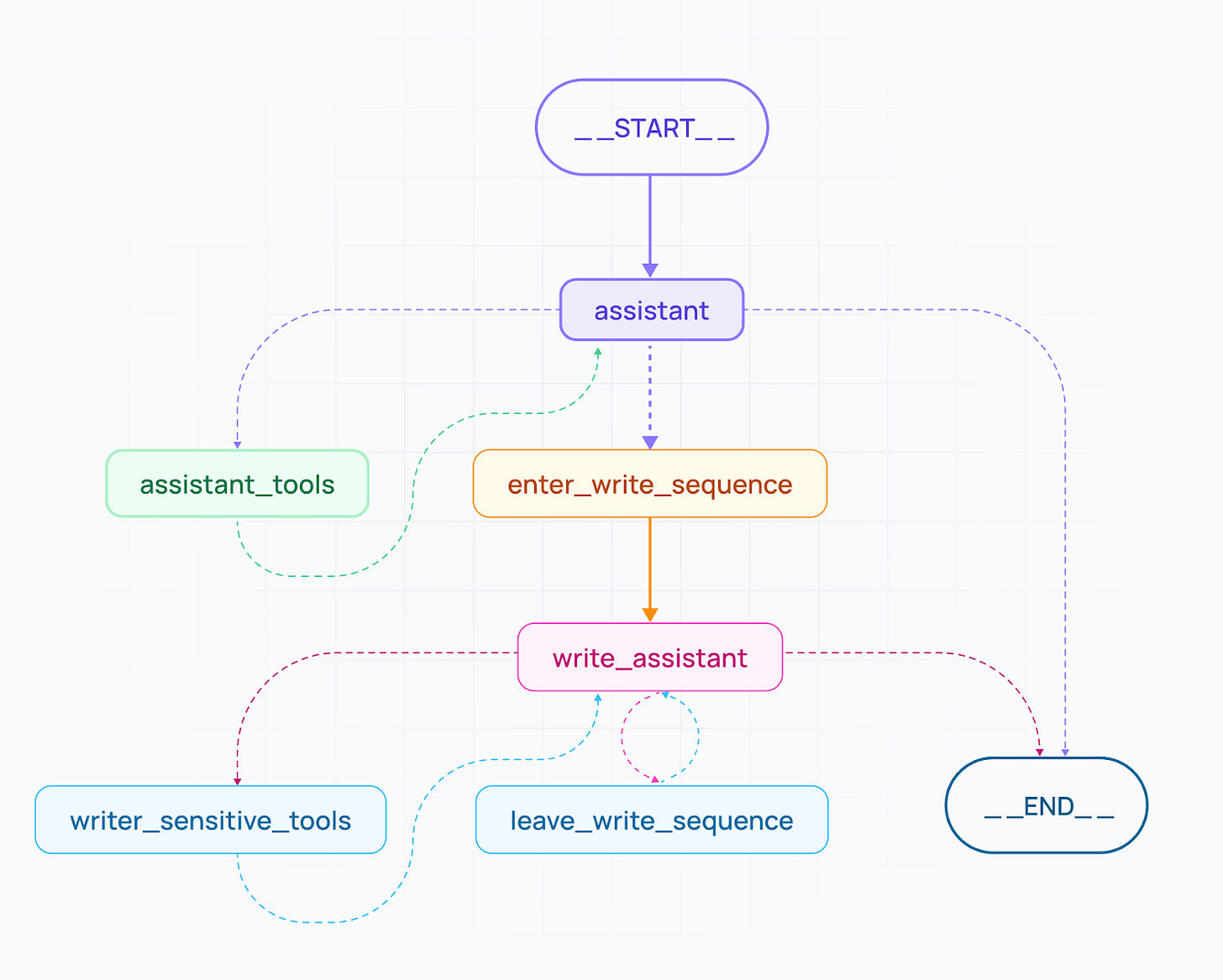

LangChain has released LangGraph v0.1, a stable release, and launched LangGraph Cloud in beta, infrastructure for deploying agents scalably and reliably. These tools tackle the challenges of building real-world AI applications that can reliably execute complex tasks, giving developers more control, visibility, and scalability in their agent-based applications.

Key Highlights:

Control and Customization - New features let developers build custom cognitive architectures with more granular control over agent workflows, which complex tasks especially require.

Human-Agent Collaboration - Developers can design agents to pause for human approval, edit actions before execution, and even “time travel” to inspect and modify past agent states.

Scalable Deployment - LangGraph Cloud simplifies agent deployment with a scalable and fault-tolerant infrastructure. It manages task queues, servers, and a robust checkpointer to ensure smooth operation under heavy user loads.
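The “pause for approval, edit, then time travel” workflow rests on one idea: snapshot the agent’s state after every step. The plain-Python toy below illustrates that idea. It is hypothetical and is not LangGraph’s API; the class `CheckpointedAgent`, its methods, and the `approve` callback are invented for this sketch.

```python
import copy
from dataclasses import dataclass, field


@dataclass
class CheckpointedAgent:
    """Toy agent runner that snapshots its state after every step.

    Hypothetical illustration only; this is not the LangGraph API.
    """
    state: dict = field(default_factory=dict)
    history: list = field(default_factory=list)  # saved snapshots, oldest first

    def checkpoint(self):
        # Deep-copy so later mutations don't alter saved snapshots.
        self.history.append(copy.deepcopy(self.state))

    def run_step(self, action, approve):
        """Propose an action, pause for human approval, then apply it.

        `approve` receives the proposed action and returns either the
        (possibly edited) action to execute, or None to reject it.
        """
        approved = approve(action)
        if approved is None:
            return False  # human rejected the action; state is unchanged
        self.state.setdefault("actions", []).append(approved)
        self.checkpoint()
        return True

    def time_travel(self, index):
        """Restore the state saved at checkpoint `index`."""
        self.state = copy.deepcopy(self.history[index])
        # Discard later checkpoints so execution can continue from here.
        del self.history[index + 1:]


agent = CheckpointedAgent()
agent.run_step("search flights", approve=lambda a: a)                     # approved as-is
agent.run_step("book $900 flight", approve=lambda a: "book $600 flight")  # edited by human
agent.run_step("email receipt", approve=lambda a: None)                   # rejected
print(agent.state["actions"])  # ['search flights', 'book $600 flight']
agent.time_travel(0)
print(agent.state["actions"])  # ['search flights']
```

For simplicity, `time_travel` here discards checkpoints after the restored point; a production checkpointer might keep them so the earlier run can still be inspected.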

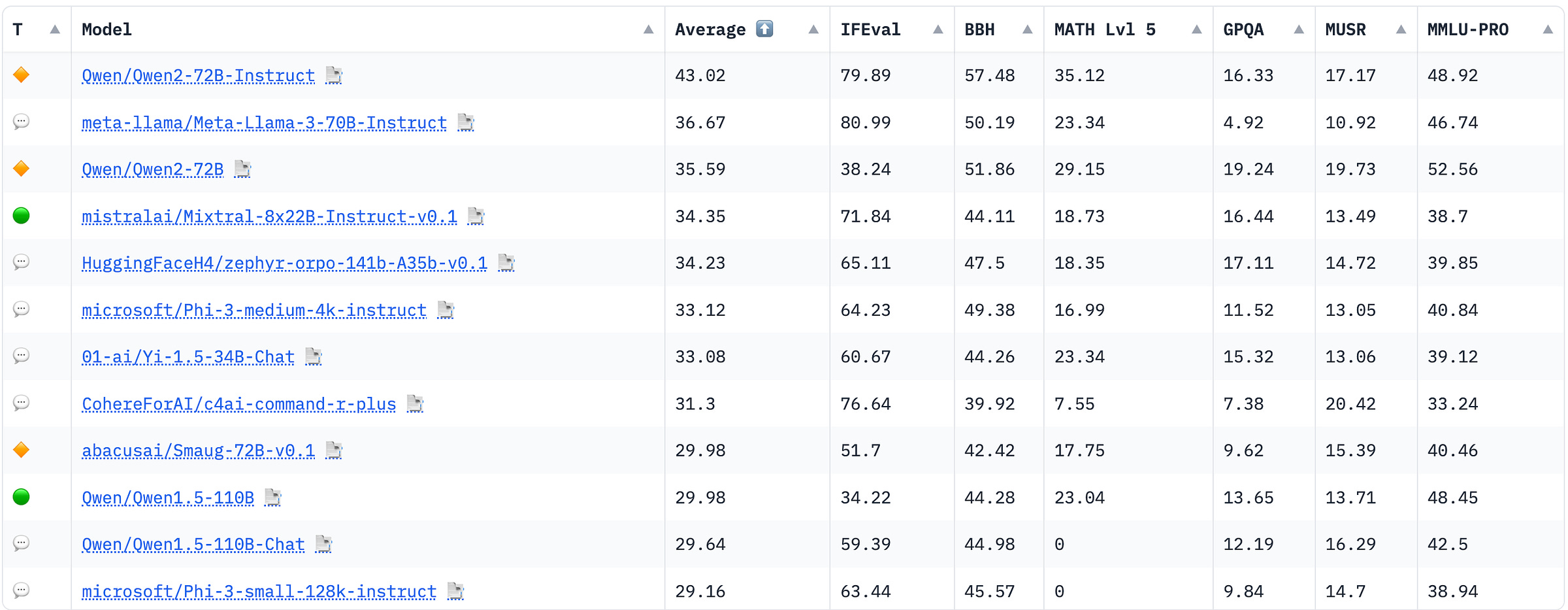

Standard industry benchmarks have become saturated: LLM scores on them have plateaued, with top models now reaching baseline human performance. There is also a risk that models were trained on benchmark data, which inflates their scores through overfitting.

This makes real progress in the field hard to track, so the evaluations needed to change. Hugging Face has released Open LLM Leaderboard v2 with a suite of new benchmarks, a fairer scoring system, and features that make the leaderboard more relevant and responsive to the needs of the AI community.

Key Highlights:

New Benchmarks - Six new benchmarks cover knowledge testing, complex reasoning, mathematical ability, and instruction following. For example, GPQA, a new knowledge benchmark, uses questions written by PhD-level experts.

Fairness and Reproducibility - The leaderboard now uses normalized scores, putting all benchmarks on an equal footing regardless of their inherent difficulty. The underlying evaluation suite has also been updated to minimize variability in results.

Community-Driven - The new leaderboard introduces several features to highlight and prioritize the models most useful to users. The “Maintainer’s Highlight” category features a curated list of high-quality LLMs from various sources, and a voting system lets users directly influence which models are prioritized for evaluation.
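The normalized scoring described under “Fairness and Reproducibility” can be sketched as a rescaling in which random guessing maps to 0 and a perfect score maps to 100, so a benchmark where guessing already earns 25% no longer gets a free head start. The sketch assumes scores below the random baseline are clipped to 0 and that the overall score is the plain average of normalized scores; the benchmark names and raw scores are made up for illustration.

```python
def normalize_score(raw, random_baseline, max_score=100.0):
    """Rescale a raw benchmark score so random guessing -> 0 and perfect -> 100.

    Scores below the random baseline are clipped to 0 (an assumption of
    this sketch).
    """
    if max_score <= random_baseline:
        raise ValueError("max_score must exceed random_baseline")
    normalized = 100.0 * (raw - random_baseline) / (max_score - random_baseline)
    return max(0.0, normalized)


# Hypothetical raw accuracies (%) for one model, with each task's random-guess baseline.
results = {
    "mcq_4_options": (40.0, 25.0),   # 4-choice questions: guessing scores 25%
    "free_form": (70.0, 0.0),        # generative task: guessing scores ~0%
    "mcq_10_options": (5.0, 10.0),   # below chance, so clipped to 0
}

normalized = {name: normalize_score(raw, base) for name, (raw, base) in results.items()}
average = sum(normalized.values()) / len(normalized)
print(normalized)  # {'mcq_4_options': 20.0, 'free_form': 70.0, 'mcq_10_options': 0.0}
print(average)     # 30.0
```

Note how the raw 40% on the 4-choice task becomes only 20 after normalization, because 25 of those 40 points are what random guessing would earn.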

Quick Bites 🤌

Meta has started rolling out a preview of the Llama 3 405B model in Meta AI to a limited number of users in the latest WhatsApp update. A screenshot of the update shows that users will be able to choose which model to interact with: Llama 3 70B will be the default, and users can switch to the 405B model for more complex prompts, subject to a usage cap.

Amazon is hiring David Luan, CEO and co-founder of AI startup Adept, along with several of Adept’s co-founders and employees to strengthen its AGI team. Amazon will also license some of Adept’s technology to accelerate its development of digital agents that can automate software workflows. (Source)

It seems OpenAI needs to speed up its string of partnerships with media houses. The Center for Investigative Reporting (CIR), publisher of Mother Jones and Reveal, has filed a copyright infringement lawsuit against Microsoft and OpenAI, alleging that the companies used its content to train their AI models without permission or compensation. (Source)

Apple is reportedly bringing Apple Intelligence to visionOS to power advanced AI features and the Siri overhaul on Vision Pro headsets. However, the release won’t happen this year. (Source)

😍 Enjoying it so far? Share it with your friends!

Tools of the Trade ⚒️

aiOS by Hyperspace: The world’s largest peer-to-peer network for AI agents. It lets you run open-source models like Llama 3 and Mistral 7B on a decentralized peer-to-peer network, leveraging other users’ computers for free.

Here's how you can do it in 4 simple steps:

Step 1 - Go to the aiOS website

Step 2 - Install the client app on your laptop

Step 3 - Open the app on your laptop

Step 4 - Install “Llama-3” from the AI models list, or use the model running on someone else’s machine.

MentatBot: A GitHub-native coding agent to automatically generate and review pull requests from GitHub issues. It uses leading AI models from OpenAI, Anthropic, and others to select relevant context, create new branches, make commits, and provide instant reviews. It achieved 38.3% on SWE-Bench Lite, beating Devin.

Pinokio: Install and control any AI application with one click, with no need for complex setups like pip or Conda. It acts as an autonomous virtual computer, automating workflows and letting you easily create and share AI-driven applications with simple JSON scripts.

Awesome LLM Apps: Build awesome LLM apps that use RAG to interact with data sources like GitHub, Gmail, PDFs, and YouTube videos through simple text prompts. These apps let you retrieve information, chat, and extract insights directly from content on these platforms.

Hot Takes 🔥

The day OpenAI releases its Voice Engine, 99% of voice actors’ jobs will be done. ~

Ashutosh Shrivastava

Three interesting facts:

- The brain evolved for vision, but turned out to be good for language.

- Vision is reverse graphics.

- GPUs were designed for graphics, but turned out to be good for language. ~

Pedro Domingos

Meme of the Day 🤡

That’s all for today! See you tomorrow with more such AI-filled content.

Real-time AI Updates 🚨

⚡️ Follow me on Twitter @Saboo_Shubham for lightning-fast AI updates and never miss what’s trending!

PS: We curate this AI newsletter every day for FREE, and your support is what keeps us going. If you find value in what you read, share it with your friends by clicking the share button below!
