Last Week in AI - A Weekly Unwind

From 7-April-2024 to 12-April-2024

It was yet another thrilling week in the AI field with advancements that further extend the limits of what can be achieved with AI.

Here are 10 AI breakthroughs that you can’t afford to miss 🧵👇

Resemble AI has rolled out Rapid Voice Cloning which makes creating high-quality voice clones a matter of seconds. By reducing the need for long audio samples to a mere 10 seconds, this technology makes voice cloning more accessible to a wider range of users. It can retain accents and subtle nuances in the voice with impressive accuracy.

To address a shortage of training data, OpenAI reportedly transcribed over a million hours of YouTube videos with its Whisper audio transcription model, without permission, to train GPT-4. YouTube's CEO says that he “has no information” on whether OpenAI used YouTube data, and that downloading YouTube content such as transcripts or video clips without authorization would violate YouTube’s terms of service.

Spotify has launched a new feature in beta that lets you create personalized playlists using AI, based on very simple text prompts. You can generate playlists based on a wide variety of prompts, including genres, moods, activities, characters, colors, or even emojis. You can even refine these playlists by giving commands in simple language. It will initially be available only in the U.K. and Australia.

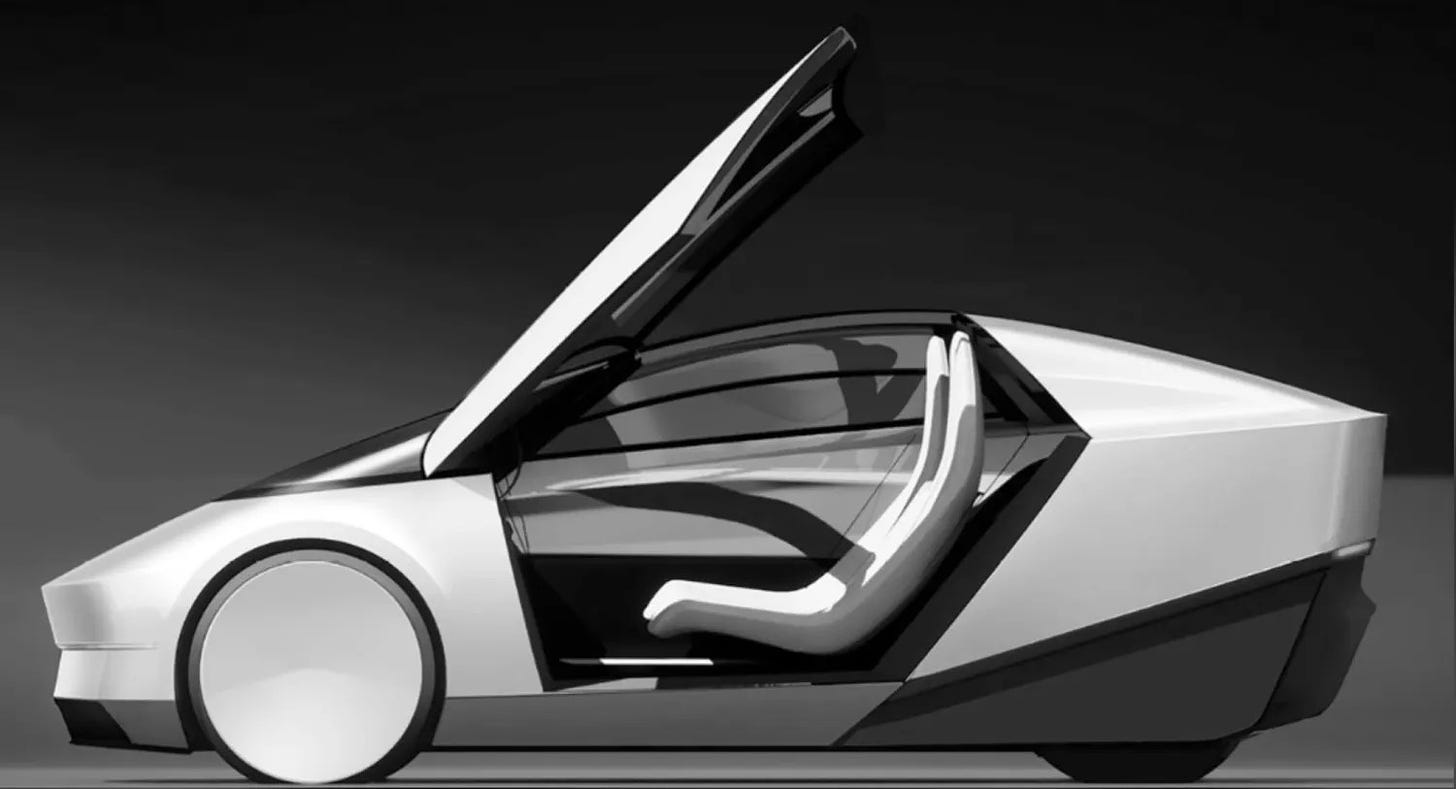

Tesla is gearing up to introduce its new “Robotaxi” on August 8th, with Elon Musk positioning it as the next big thing after the Cybertruck. Designed without pedals or a steering wheel, this vehicle is built from scratch for autonomous driving, offering a glimpse into the future of transportation.

Tesla has also indicated that every consumer vehicle it has made since 2016 could potentially become a Robotaxi with just a software update. The Robotaxi’s design is said to take after the rugged, futuristic style of the Cybertruck.

At Google Cloud Next 2024, Google announced a heap of AI developments. Among them: Gemini 1.5 Pro is now available in public preview and can also process audio inputs; the Gemma family gains three new models: CodeGemma for coding, RecurrentGemma for research, and an improved lightweight Gemma 1.1 release; Google’s text-to-image model Imagen 2.0 can now generate short, 4-second live images from text prompts; and Google Vids, a new AI-powered video creation app in Google Workspace for storytelling at work, lets you generate storyboards, edit them, and assemble videos with stock footage, images, background music, and voiceovers.

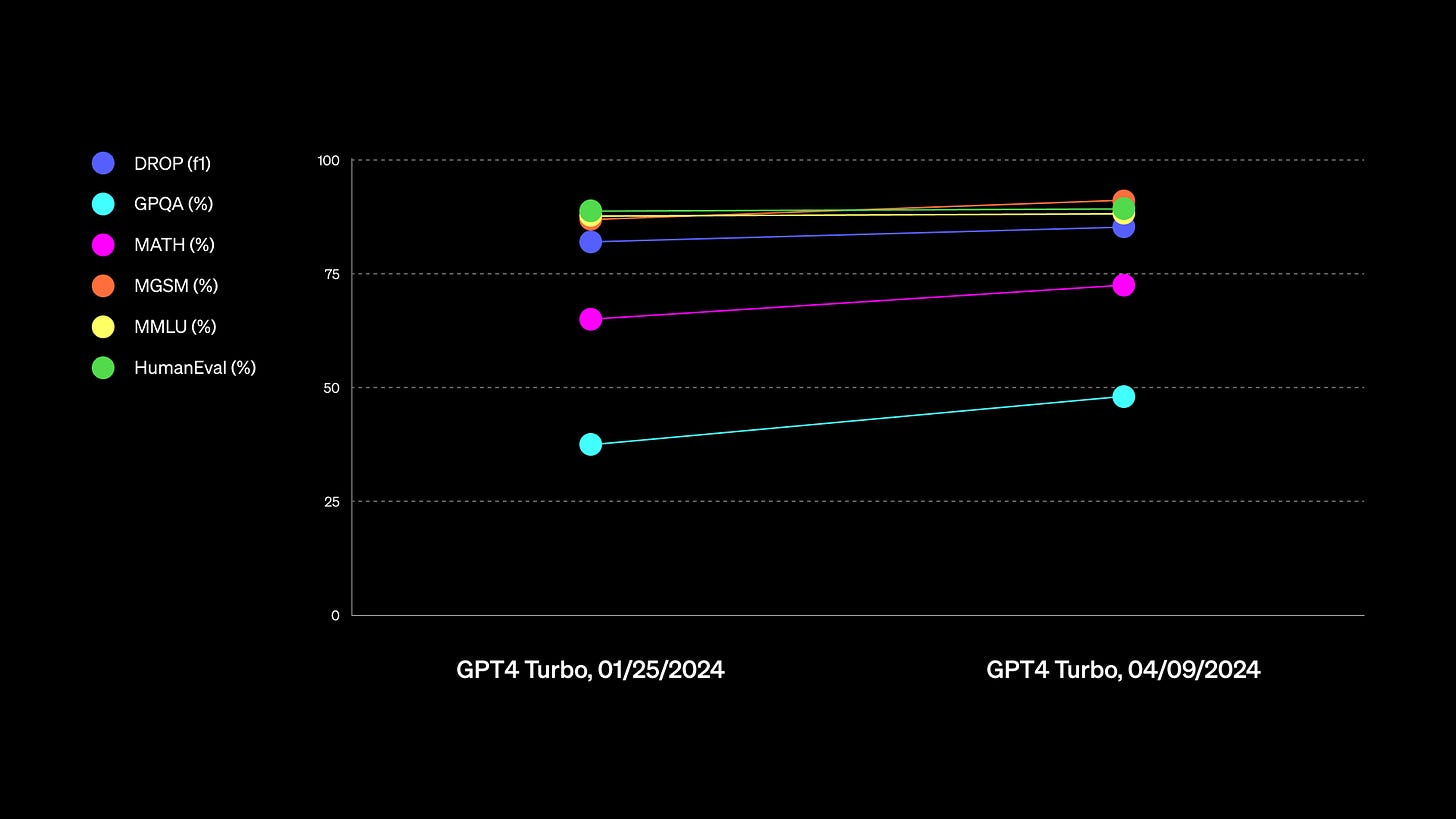

OpenAI has rolled out an updated GPT-4 Turbo model that adds vision capability and significant performance improvements. This latest version of GPT-4 Turbo is available in ChatGPT for paid users and via the API. ChatGPT’s responses will be more direct, less verbose, and more conversational. The model also improves ChatGPT’s mathematical calculations, logical reasoning, and coding abilities.

Meta has confirmed at an event that it plans to launch Llama 3 within the next month, marking the next phase in the development of its open-source foundation language models. Nick Clegg, Meta’s president of global affairs, said that Llama 3 will be a suite of several models with different capabilities and versatilities, released over the course of this year, starting very soon.

It was also reported that the launch will start with Meta releasing two smaller versions of Llama 3 first, probably 7B and 13B sized, but Meta hasn’t confirmed this yet.

Meta has unveiled the next generation of its custom-designed AI chip, the Meta Training and Inference Accelerator (MTIA) v2. This powerful chip is specifically built to handle the demands of Meta’s AI workloads, particularly the ranking and recommendation models that power user experiences across its platforms. MTIA v2 boasts significant performance improvements over its predecessor, offering greater efficiency and capabilities to support Meta’s growing AI ambitions.

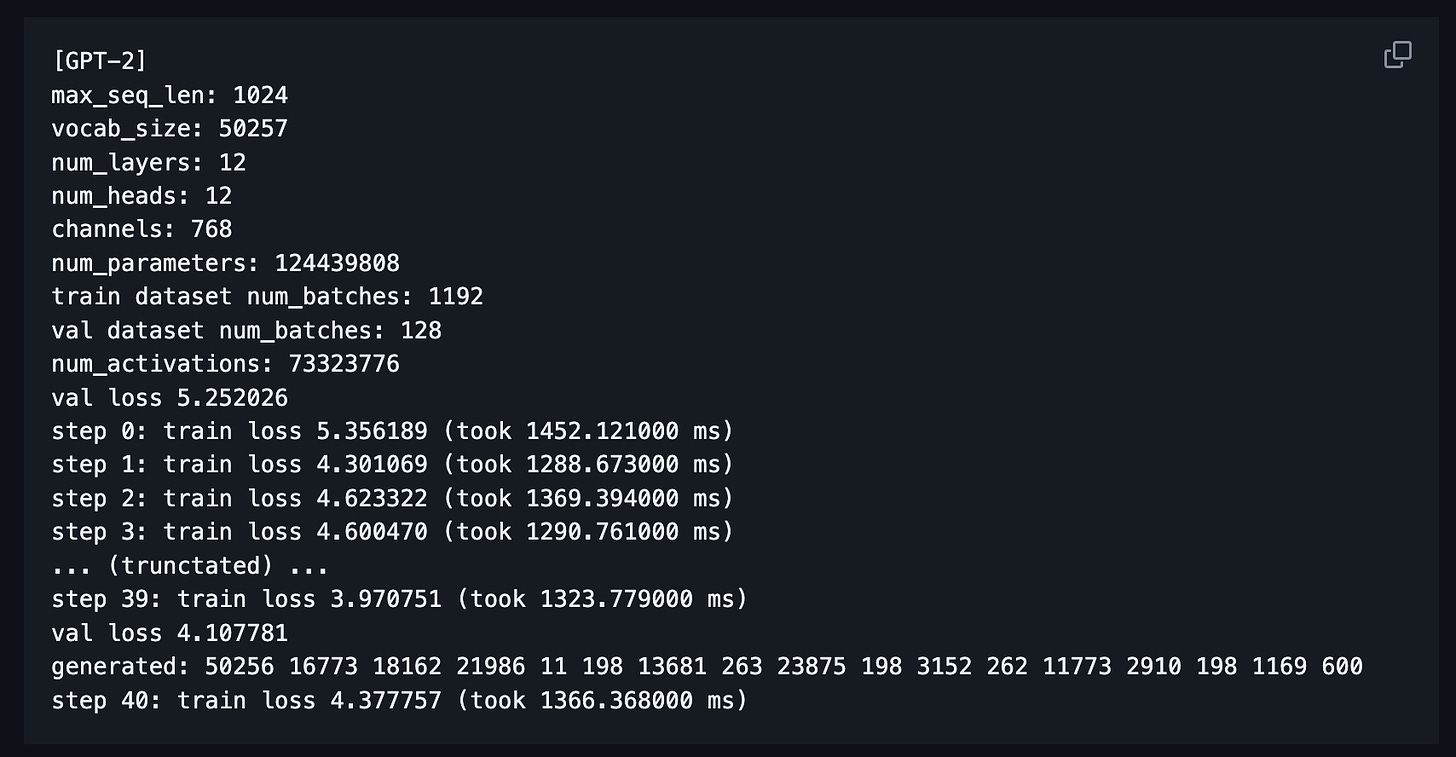

Andrej Karpathy’s new project called “llm.c” allows you to train LLMs from scratch using C programming and CUDA, bypassing the need for heavy software like PyTorch. This innovative approach is encapsulated in a single C file, enabling a lightweight and efficient training process. The project starts with training a GPT-2 model, utilizing just about 1,000 lines of code to demonstrate the ease of training LLMs. Additionally, llm.c supports customization, allowing users to train models on their own datasets to meet specific needs.

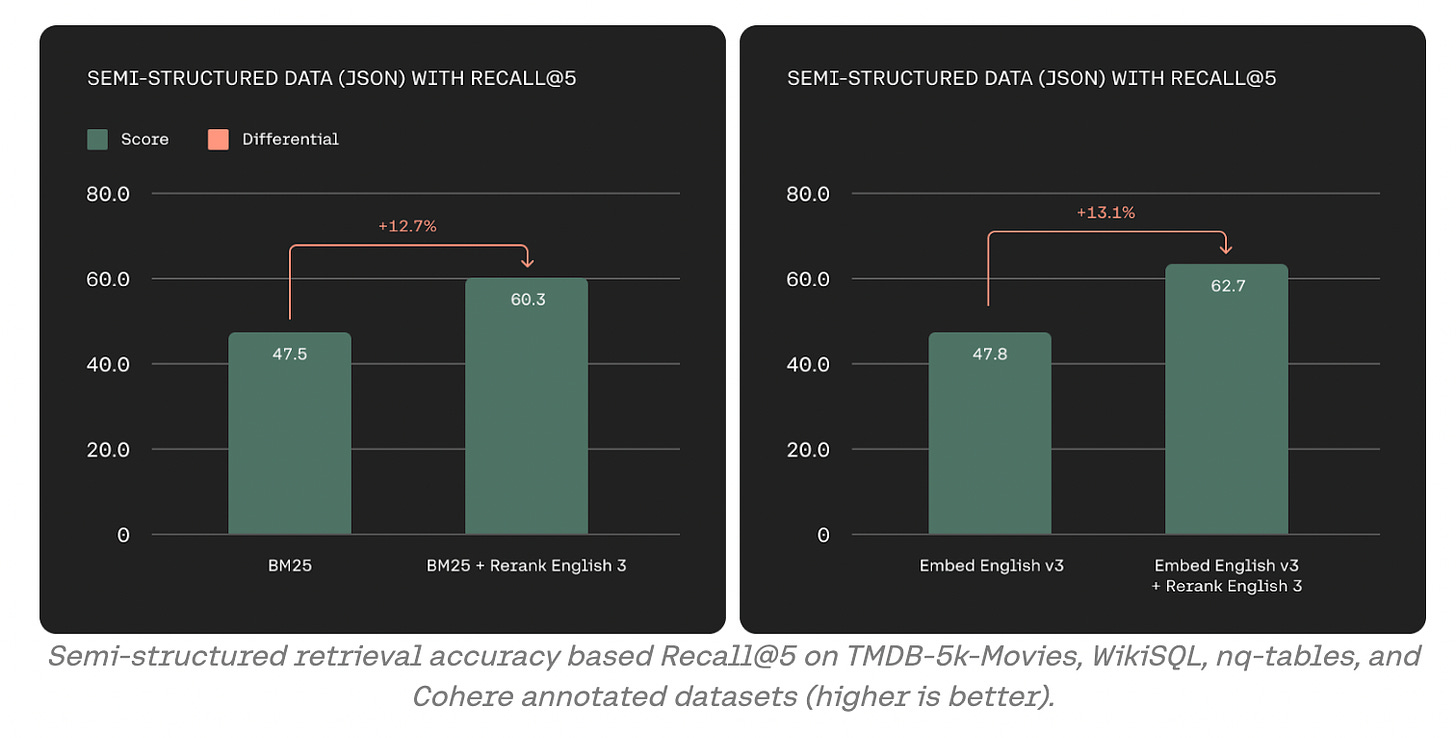

Cohere has released Rerank 3, a model built to improve enterprise search and RAG systems that can integrate with existing databases and search technologies. It handles a context length of up to 4k tokens and searches various data formats, including emails and code, in over 100 languages, improving document understanding and search precision. The model also reduces latency by up to 3x for longer documents and significantly decreases operational costs for RAG applications.
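For readers new to reranking, here is a minimal sketch of where a reranker sits in a search or RAG pipeline: a first-stage search returns candidate documents, and the reranker rescores them against the query and keeps the best few. This is not Cohere's API or model. The `toy_relevance` scorer below is a hypothetical stand-in based on simple word overlap, and the function names and sample documents are made up for illustration.

```python
import re

def tokenize(text):
    # Lowercase and split into alphanumeric tokens ("reset_password" -> "reset", "password").
    return re.findall(r"[a-z0-9]+", text.lower())

def toy_relevance(query, document):
    # Hypothetical stand-in for a real reranking model:
    # the fraction of query tokens that also appear in the document.
    query_tokens = tokenize(query)
    if not query_tokens:
        return 0.0
    doc_tokens = set(tokenize(document))
    return sum(1 for t in query_tokens if t in doc_tokens) / len(query_tokens)

def rerank(query, candidates, top_n=2, score_fn=toy_relevance):
    # Score every candidate, then sort by score (highest first).
    # Ties keep the original first-stage order.
    scored = [(score_fn(query, doc), i, doc) for i, doc in enumerate(candidates)]
    scored.sort(key=lambda item: (-item[0], item[1]))
    return [(doc, score) for score, _, doc in scored[:top_n]]

# Candidates as they might come back from a first-stage keyword or vector search.
candidates = [
    "Quarterly sales report for the EMEA region",
    "How to reset your VPN password",
    "def reset_password(user): send reset email",
    "Office lunch menu for Friday",
]

results = rerank("reset password", candidates, top_n=2)
for doc, score in results:
    print(f"{score:.2f}  {doc}")
```

In a real system, `score_fn` would call a trained reranking model instead of counting words, and only the top results would be passed to the LLM as context.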

Which of the above AI developments are you most excited about, and why?

Tell us in the comments below ⬇️

That’s all for today 👋

Stay tuned for another week of innovation and discovery as AI continues to evolve at a staggering pace. Don’t miss out on the developments – join us next week for more insights into the AI revolution!

Click on the subscribe button and be part of the future, today!

📣 Spread the Word: Think your friends and colleagues should be in the know? Click the ‘Share’ button and let them join this exciting adventure into the world of AI. Sharing knowledge is the first step towards innovation!
