Last Week in AI - A Weekly Unwind

From 21-July-2024 to 27-July-2024

It was yet another thrilling week in the AI field with advancements that further extend the limits of what can be achieved with AI.

Here are 10 AI breakthroughs that you can’t afford to miss 🧵👇

OpenAI has announced that it is prototyping “SearchGPT,” a new search feature that combines AI with the web to give you fast and timely answers with clear and relevant sources. SearchGPT will give you synthesized AI-generated answers to your query, based on real-time information from the web. It combines search with conversation abilities, so you can ask follow-up questions to refine your results or go in depth.

It has been rolled out to a small group of users for feedback. You can apply for the waitlist here.

Google DeepMind’s AI models, AlphaProof and AlphaGeometry 2, solved four out of six problems from this year’s International Mathematical Olympiad (IMO), achieving the same level as a silver medalist in the competition for the first time.

AlphaProof excels in algebra and number theory, while AlphaGeometry 2 focuses on geometry. AI progress in math reasoning can aid mathematicians in discovering new insights and solutions.

Google’s new open-source project, Oscar, simplifies open-source software development by introducing AI agents that ease the workload of maintaining these projects. The project uses LLMs not to replace coding, but to handle tedious maintenance tasks like processing issues and connecting questions to relevant documentation.

These agents excel at tasks like processing incoming issues, linking them to existing resources, and even identifying duplicate reports. This approach reduces the need for maintainers to constantly monitor and triage new submissions.

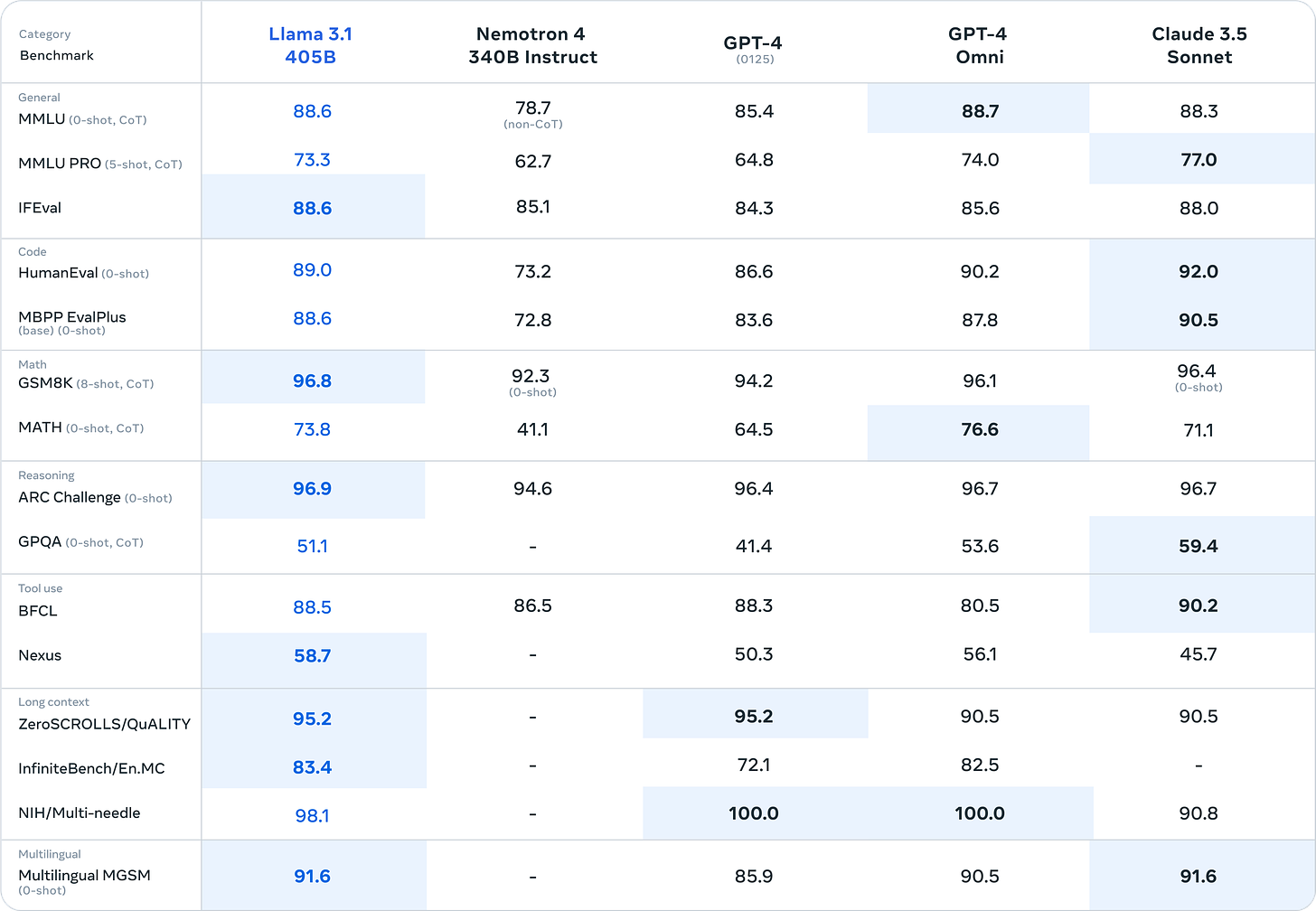

Meta released its new series of Llama models, Llama 3.1, including the much-anticipated Llama 3.1 405B model. This is the first frontier-level, open-source AI model with state-of-the-art capabilities, competing with the best closed-source models. This new family of models also includes upgraded versions of the 8B and 70B models.

All Llama 3.1 models are multilingual and offer a longer context window of 128K tokens, state-of-the-art tool use, and stronger overall reasoning capabilities. Llama 3.1 405B competes strongly with the leading closed models GPT-4o and Claude 3.5 Sonnet, even outperforming them in a few tasks like math and tool use. Llama 3.1 8B and 70B are the best in their class.

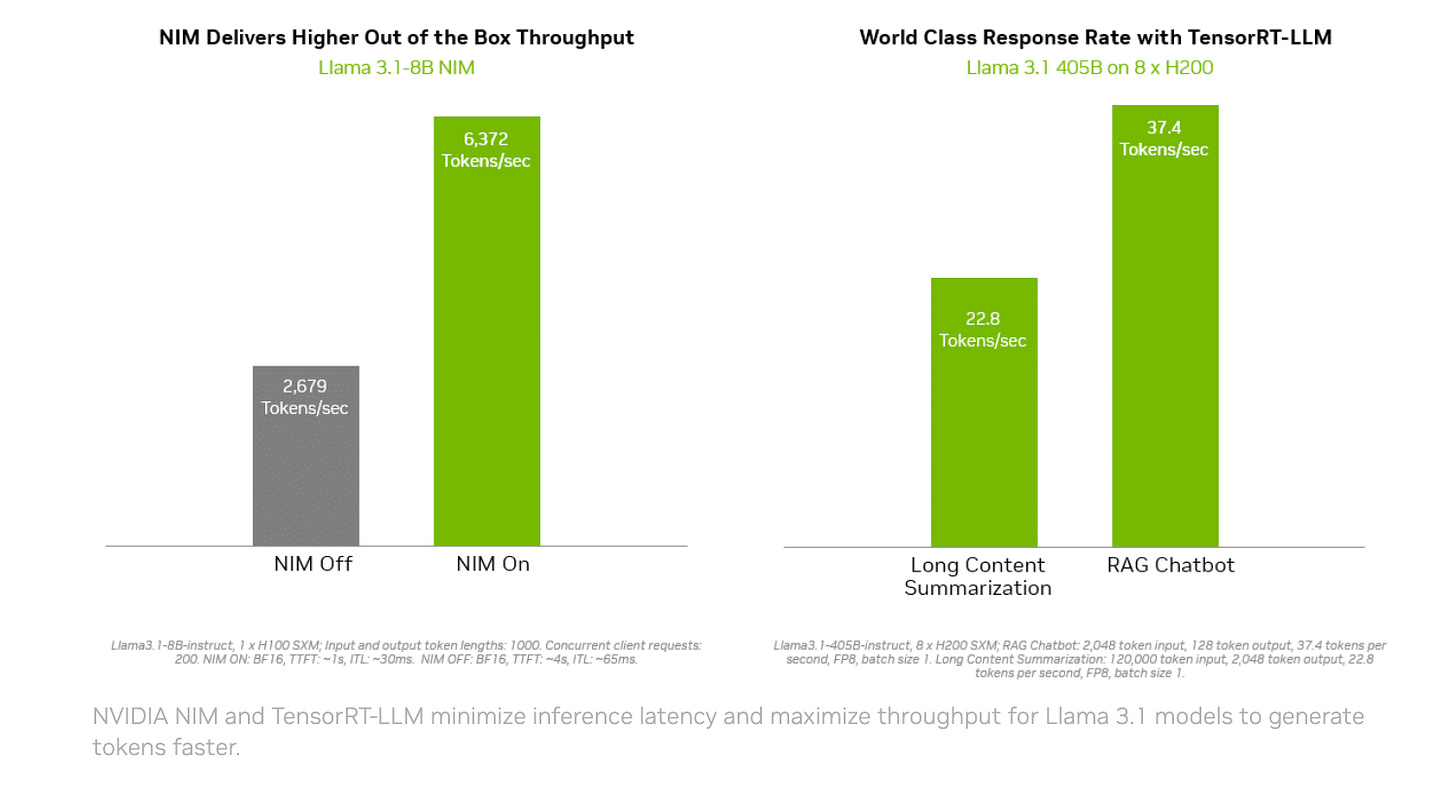

NVIDIA has introduced “AI Foundry,” a new platform for enterprises to build and deploy custom generative AI models using the new Meta Llama 3.1 models. This platform offers comprehensive tools for creating domain-specific models, fine-tuning with proprietary or synthetic data, and deploying them using NVIDIA’s optimized infrastructure. Along with this, NVIDIA has also released NeMo Retriever microservices to enhance RAG pipelines by improving response accuracy.

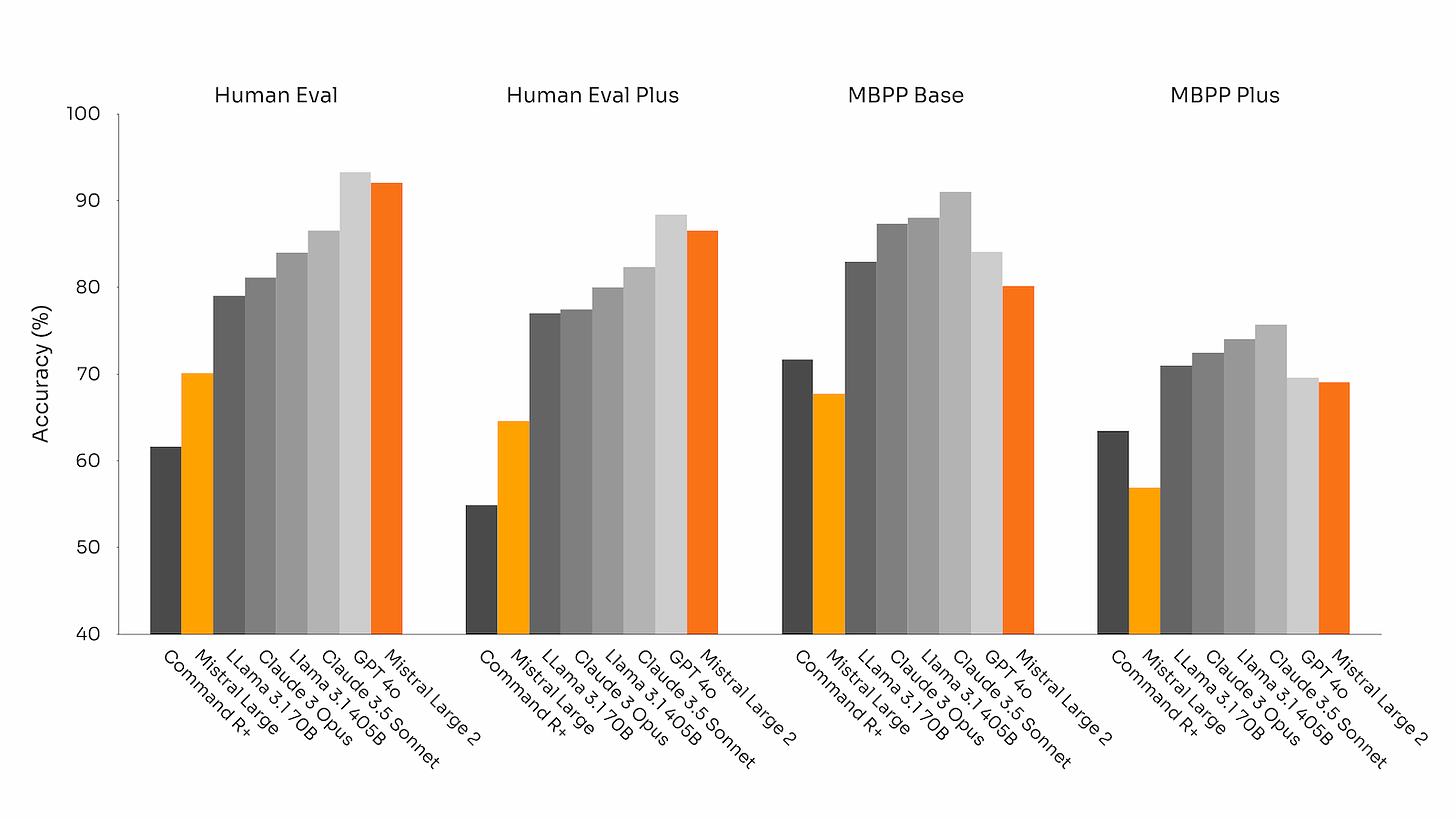

Mistral AI released its new flagship model, Mistral Large 2, with 123B parameters. This model surpasses its predecessor in code generation, mathematics, and reasoning, with stronger multilingual support and advanced function calling. Mistral Large 2 is designed for single-node inference with a 128k context window. Notably, it achieves 84% on MMLU, setting a new standard in terms of performance/cost of serving.

Mistral Large 2 is trained on a very large proportion of code. It outperforms much larger models, including Claude 3.5 Sonnet and Llama 3.1 405B, and matches the performance of GPT-4o on the HumanEval and MultiPL-E benchmarks.

Cohere has released Rerank 3 Nimble, its newest foundation model in the Cohere Rerank model series, to enhance enterprise search and RAG systems. This model is 3x faster than its predecessor, Rerank 3, while maintaining a high level of accuracy, making it ideal for high-volume applications.

Rerank 3 Nimble reranks very long documents and processes multi-aspect and semi-structured data for user queries. By integrating Rerank 3 Nimble into RAG systems, developers can ensure that only the most relevant documents are passed to the generative language model. This results in more focused content generation and reduced computational costs.
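To make the reranking step concrete, here is a minimal sketch of where a reranker sits in a RAG pipeline. It does not use Cohere's API: `overlap_score` is a hypothetical toy scorer standing in for a real reranking model, and `rerank` and `build_prompt` are illustrative names, not part of any library.

```python
from typing import Callable, List, Tuple


def overlap_score(query: str, document: str) -> float:
    """Toy relevance score: fraction of query terms found in the document.

    Hypothetical stand-in for a real reranking model (an assumption,
    not how Rerank 3 Nimble scores documents).
    """
    query_terms = set(query.lower().split())
    doc_terms = set(document.lower().split())
    if not query_terms:
        return 0.0
    return len(query_terms & doc_terms) / len(query_terms)


def rerank(
    query: str,
    documents: List[str],
    top_n: int,
    score_fn: Callable[[str, str], float] = overlap_score,
) -> List[Tuple[float, str]]:
    """Score every retrieved document and keep only the top_n most relevant."""
    scored = [(score_fn(query, doc), doc) for doc in documents]
    # Highest score first; the sort is stable, so ties keep retrieval order.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_n]


def build_prompt(query: str, ranked: List[Tuple[float, str]]) -> str:
    """Assemble the generator prompt from the reranked documents only."""
    context = "\n".join(f"- {doc}" for _, doc in ranked)
    return f"Context:\n{context}\n\nQuestion: {query}"


retrieved = [
    "the cat sat on the mat",
    "reranking improves rag search quality",
    "rag search pipelines need reranking",
]
top_docs = rerank("rag reranking search", retrieved, top_n=2)
prompt = build_prompt("rag reranking search", top_docs)
```

Because only the `top_n` documents reach the prompt, the generator sees less irrelevant text, which is the source of the cost and focus benefits described above. In a real system, `score_fn` would be replaced by a call to the reranking model.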

LLMs rely on fixed training data, which limits their ability to access real-time information and leads to inaccurate or outdated responses. RAG addresses this issue by incorporating up-to-date information from external sources, but it doesn’t fully solve the problem due to challenges in managing diverse and multimodal knowledge bases.

To address this, Microsoft has introduced a unified database system that can manage and query various data types, including text, images, and videos. This database system seamlessly handles both vector data (representing semantic relationships) and scalar data (traditional structured data), improving information retrieval speed and accuracy for LLMs. The system is open-sourced.
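The idea of querying vector and scalar data together can be sketched with a small in-memory example. This is not Microsoft's system or its API: the `Record` fields, `hybrid_query`, and the brute-force cosine search are all assumptions chosen for illustration, and a real database would use an index rather than scanning every record.

```python
import math
from dataclasses import dataclass
from typing import Callable, List, Sequence


@dataclass
class Record:
    id: str
    modality: str                # scalar field, e.g. "text", "image", "video"
    year: int                    # scalar field
    embedding: Sequence[float]   # vector field


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def hybrid_query(
    records: List[Record],
    query_embedding: Sequence[float],
    scalar_filter: Callable[[Record], bool],
    k: int,
) -> List[Record]:
    """Apply the scalar filter, then rank the survivors by vector similarity."""
    candidates = [r for r in records if scalar_filter(r)]
    candidates.sort(
        key=lambda r: cosine_similarity(r.embedding, query_embedding),
        reverse=True,
    )
    return candidates[:k]


records = [
    Record("a", "text", 2024, [1.0, 0.0]),
    Record("b", "image", 2024, [0.9, 0.1]),
    Record("c", "text", 2020, [1.0, 0.0]),
    Record("d", "text", 2024, [0.0, 1.0]),
]
results = hybrid_query(
    records,
    query_embedding=[1.0, 0.0],
    scalar_filter=lambda r: r.modality == "text" and r.year >= 2023,
    k=2,
)
```

Here the scalar conditions (modality and year) narrow the candidate set before the vector comparison ranks what remains. Filtering first is only one possible ordering; a unified system can choose how to combine the two kinds of predicates.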

Together AI is launching its new inference stack, Together Inference Engine 2.0 (V2). This engine offers significantly faster decoding speeds than the open-source vLLM and outperforms alternatives like Amazon Bedrock and Azure AI. Together AI is also releasing Together Turbo and Together Lite endpoints, starting with Meta Llama 3, to provide a range of performance, quality, and pricing options.

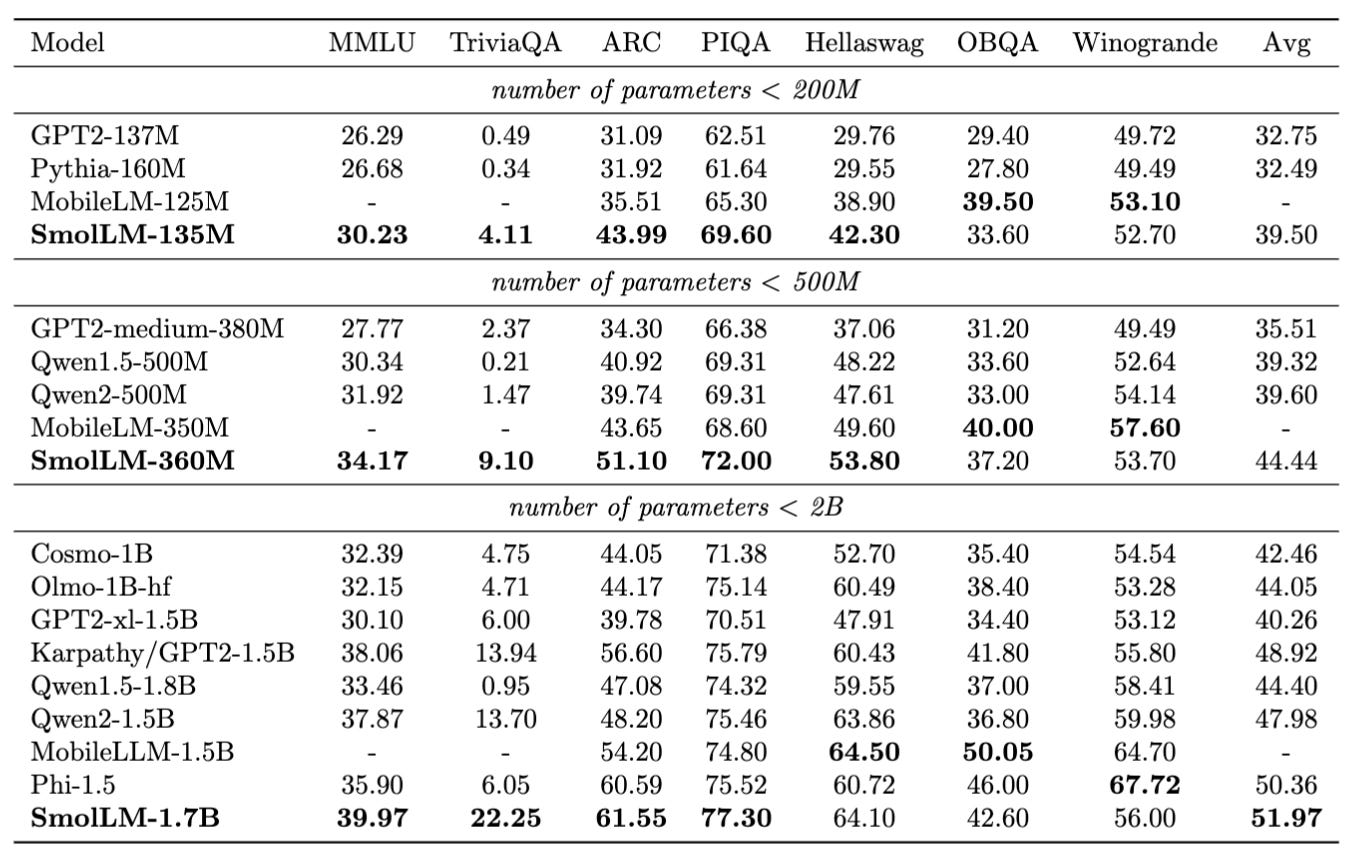

Hugging Face has introduced SmolLM, a new series of small language models (SLMs) designed for performance and efficiency. The SmolLM family comes in three sizes: 135M, 360M, and 1.7B parameters, each excelling in benchmarks against similar-sized models. These models are trained on SmolLM-Corpus, a meticulously crafted dataset also released by Hugging Face. SmolLM is designed to run locally, even on devices like smartphones, making it highly accessible for various applications.

Which of the above AI developments are you most excited about, and why?

Tell us in the comments below ⬇️

That’s all for today 👋

Stay tuned for another week of innovation and discovery as AI continues to evolve at a staggering pace. Don’t miss out on the developments – join us next week for more insights into the AI revolution!

Click on the subscribe button and be part of the future, today!

📣 Spread the Word: Think your friends and colleagues should be in the know? Click the ‘Share’ button and let them join this exciting adventure into the world of AI. Sharing knowledge is the first step towards innovation!

🔗 Stay Connected: Follow us for updates, sneak peeks, and more. Your journey into the future of AI starts here!
