Last Week in AI - A Weekly Unwind

From: 18-Dec-2023 to 24-Dec-2023

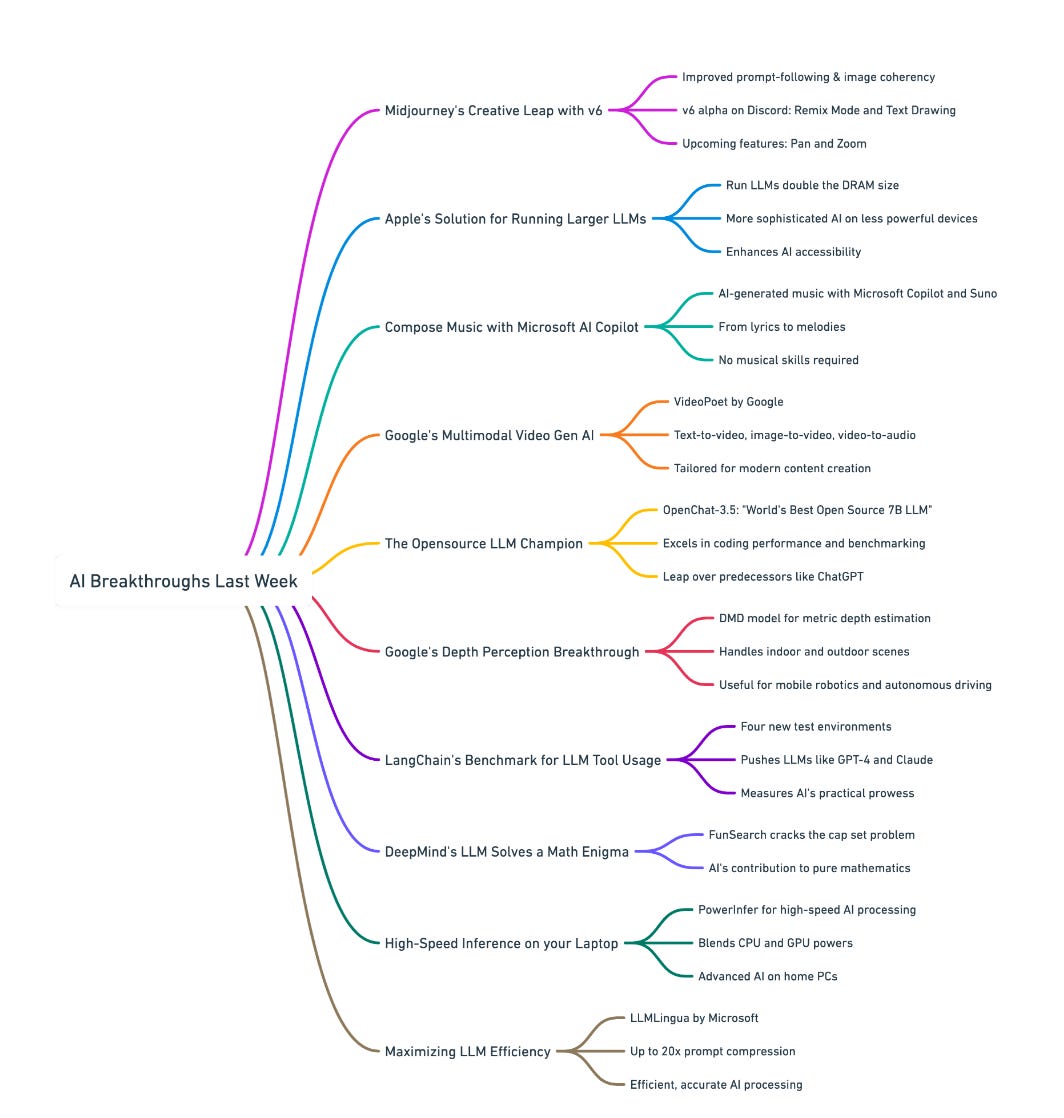

This past week has been another exciting one for the field of Artificial Intelligence, showcasing leaps in technology that continue to push the boundaries of what's possible. Here are 10 AI breakthroughs that you can't afford to miss 🧵👇

Midjourney's Creative Leap with v6

Midjourney has announced the alpha release of Midjourney v6, currently available on Discord. The v6 model improves significantly in how it follows prompts, even with longer inputs. This means you can expect a more precise translation of your textual descriptions into images. The model's understanding has been refined, resulting in more coherent and knowledge-informed outputs.

Apple's Solution for Running Larger LLMs

LLMs have immense computational and memory requirements, which makes them hard to run on devices with limited DRAM capacity. Researchers at Apple have proposed a technique that addresses this challenge, especially for resource-constrained devices. It allows a device to run models up to 2x the size of its DRAM capacity, widening access to sophisticated AI technologies.

Compose Music with Microsoft AI Copilot

Microsoft Copilot now lets you generate music with AI from simple text prompts. Microsoft has partnered with Suno, a leading AI music generation company, to let users create songs in various genres and styles, lyrics included.

You don't need to know how to sing, play an instrument, or read music. The combination of Microsoft Copilot and Suno will handle the complex parts of music creation. Simply visit the Copilot website, sign in to your Microsoft account, and enable the Suno plugin, which is already being rolled out!

Google's Multimodal Video Gen AI

Producing coherent and dynamic large motions in video generation has been a longstanding challenge. Google has released VideoPoet, which applies LLMs to an array of video generation tasks. The model is capable of text-to-video, image-to-video, and video-to-audio conversions, along with advanced techniques like video stylization, inpainting, and outpainting.

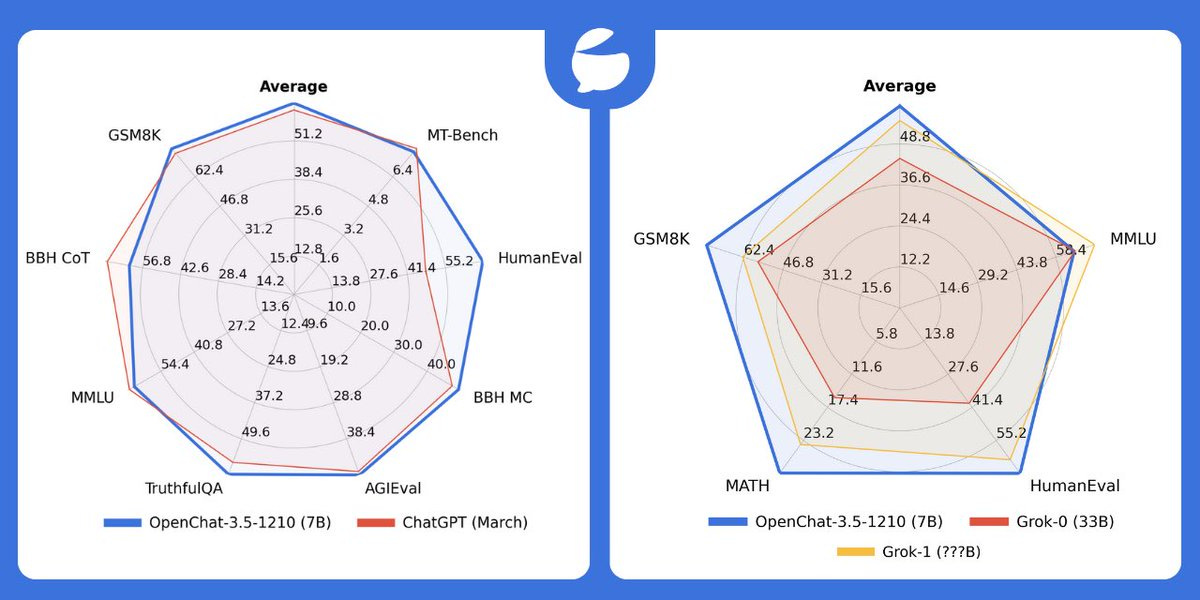

The Open-Source LLM Champion

OneAI has released OpenChat-3.5-1210, an upgrade to OpenChat-3.5 with a particular emphasis on coding performance. The model shows a near 15-point increase on the HumanEval benchmark while maintaining or improving performance on other benchmarks. Touted as the "World's Best Open Source 7B LLM", OpenChat-3.5-1210 surpasses ChatGPT and xAI’s Grok.

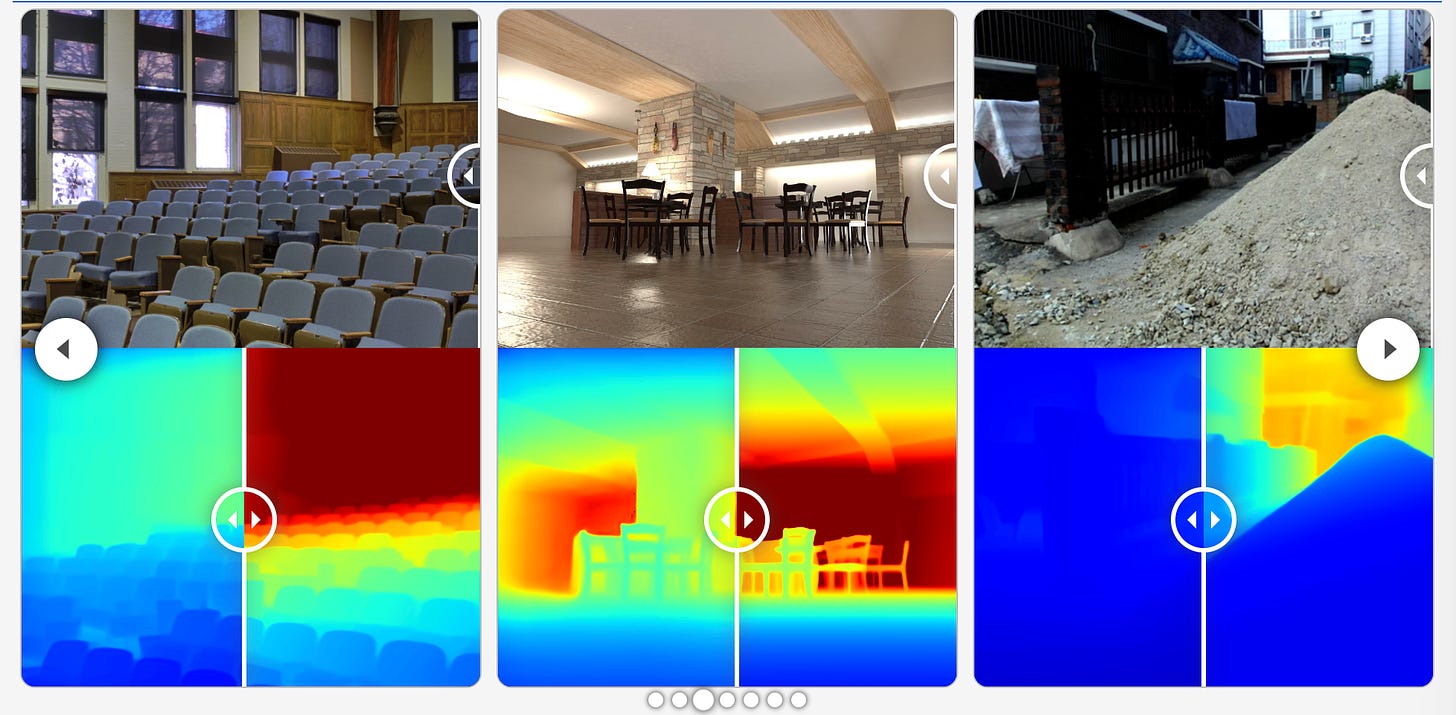

Google's Depth Perception Breakthrough

Zero-shot metric depth estimation is challenging because colors and depth information vary a lot between indoor and outdoor scenes. Additionally, unknown camera intrinsics make it hard to accurately gauge the size and distance of objects. Google has released the DMD (Diffusion for Metric Depth) model to estimate monocular metric depth in various environments, a crucial capability for applications like mobile robotics and autonomous driving.

LangChain's Benchmark for LLM Tool Usage

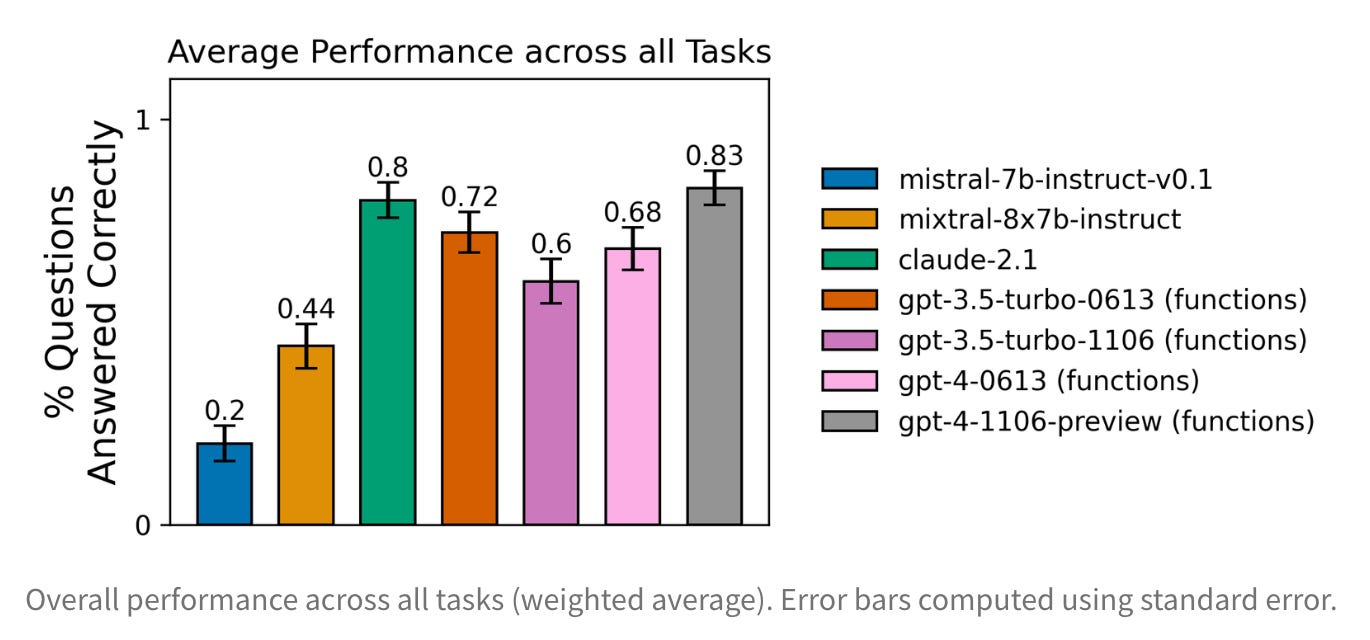

LangChain has released four new testing environments to benchmark the capabilities of various LLMs in tool usage tasks. Together they offer a structured framework to evaluate and compare how well LLMs like GPT-4, GPT-3.5, Claude, and others perform tasks that require tool use, function calling, and overcoming pre-trained biases.

DeepMind's LLM Solves a Math Enigma

Google DeepMind has successfully employed an LLM to solve an elusive problem in pure mathematics. This marks the first instance where a language model has contributed a novel and verifiable solution to a complex scientific question, offering insights previously unknown in the field.

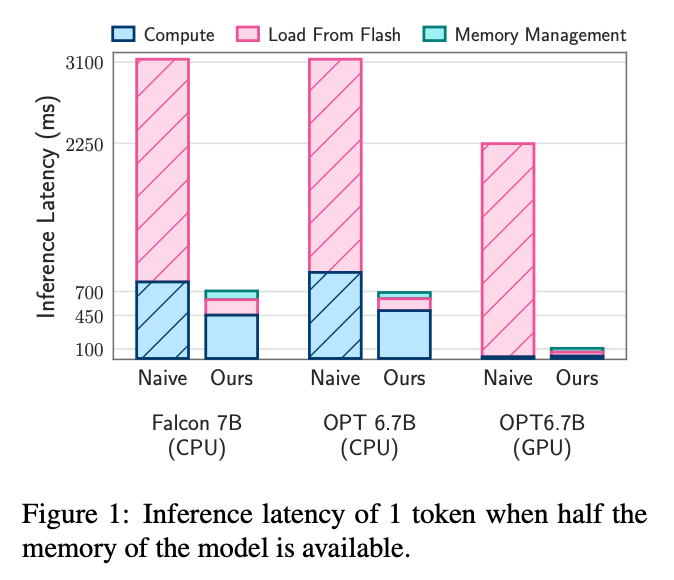

High-Speed Inference on your Laptop

PowerInfer is a new tool for running advanced language models that brings high-speed AI processing to everyday computers with standard GPUs. It combines CPU and GPU capabilities to handle complex language tasks more efficiently, making powerful AI models more accessible for personal use and research.

Maximizing LLM Efficiency: Less is More

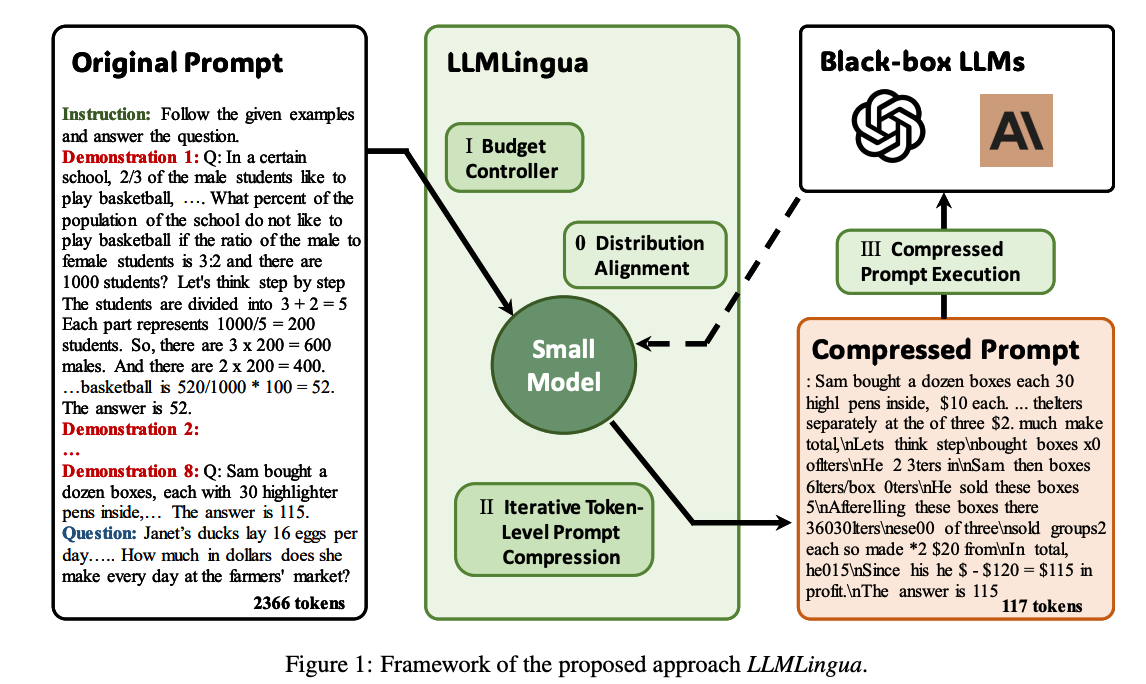

LLMs are increasingly used in various domains, but long prompts hinder their effectiveness, leading to increased API response latency, exceeded context window limits, loss of contextual information, expensive API costs, and degraded performance. Researchers at Microsoft have introduced LLMLingua, a prompt compression method that shrinks prompts by up to 20x to streamline their processing in LLMs, with a focus on efficiency and accuracy.

IMPORTANT 👇👇👇

Stay tuned for another week of innovation and discovery as AI continues to evolve at a staggering pace. Don’t miss out on the developments – join us next week for more insights into the AI revolution!

Click on the subscribe button and be part of the future, today!

📣 Spread the Word: Think your friends and colleagues should be in the know? Click the 'Share' button and let them join this exciting adventure into the world of AI. Sharing knowledge is the first step towards innovation!
