French startup bringing Voice AGI to life

PLUS: Salesforce's function-calling model, Eleven Labs' Voice Isolator

Today’s top AI Highlights:

Kyutai unveils the first real-time Voice AI ever released, Moshi

Salesforce’s 7B model outperforms GPT-4 & Claude-3 Opus in function-calling

Eleven Labs releases Voice Isolator to extract speech from any audio

Google’s carbon footprint balloons in its Gemini AI era

Add code interpreting capabilities to your AI apps with this SDK

& so much more!

Read time: 3 mins

Latest Developments 🌍

Kyutai, a Paris-based AI research lab, has unveiled Moshi, its real-time voice AI. Developed from scratch in just 6 months by a team of eight, Moshi is the first voice-enabled AI openly available for public testing and use. Its voice is realistic and emotionally nuanced, and it can speak in 70 different expressions. With a latency of about 160 ms, conversations feel natural and real-time. Kyutai says Moshi’s code and model weights will be shared publicly soon.

Key Highlights:

Model and Training - The first step was to train a text-only LLM called Helium, a 7B parameter model that gives Moshi its underlying knowledge. The team then trained it jointly on a mix of text and audio data so that the model can process and generate audio.

Conversation Abilities - The model was fine-tuned on conversation data so that it understands when to start speaking and when to stop. The team relied on synthetic data, with Helium generating 100K “oral-style” transcripts.

Voice Training - Moshi’s voice was synthesized using a separate Text-to-Speech (TTS) model, trained on 20 hours of audio from voice actor Alice. It can speak with various emotions, tones, and accents, including French.

Multistream Audio - Moshi can process and generate audio simultaneously. It handles two audio streams, one for listening and one for responding, in real time. This keeps conversations flowing naturally and lets you interrupt Moshi while it’s speaking.

Open-source Plans - Kyutai plans to publicly release Moshi's codebase, 7B model, audio codec, and optimized stack.

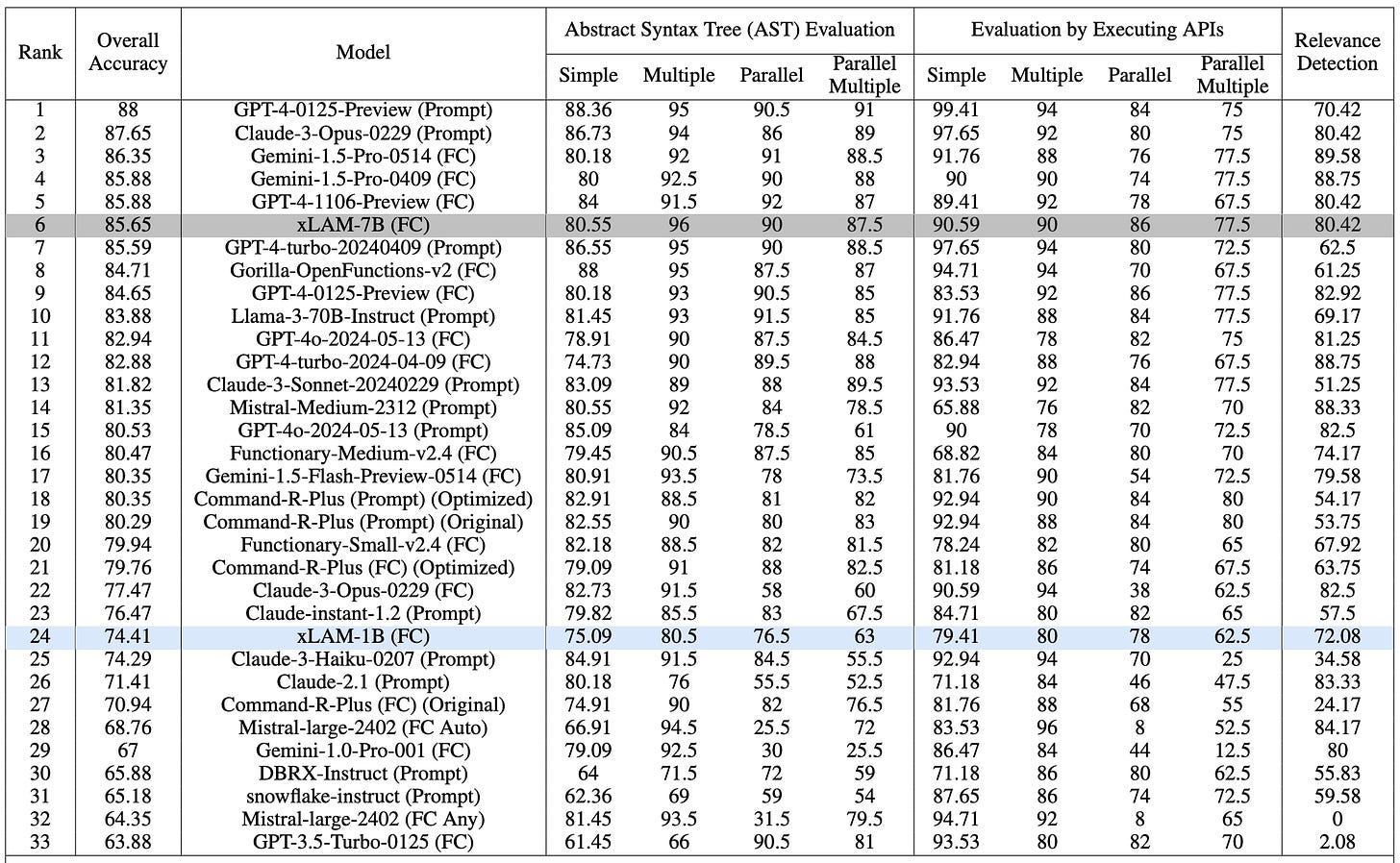

Salesforce has released two new language models, xLAM-7B and xLAM-1B, designed for “function calling.” They are particularly good at interacting with applications and services for retrieving information and completing tasks as instructed. These models stand out for their strong performance despite being smaller.

With only 7B parameters, the model achieved SOTA performance on the Berkeley Function-Calling Benchmark, outperforming multiple GPT-4 models. Even the 1B model surpassed GPT-3.5-Turbo and Claude-3 Haiku. The key lies in how they are trained.

Key Highlights:

APIGen Framework - Salesforce introduces a framework to generate high-quality, diverse datasets specifically for training function-calling models. APIGen collects real-world APIs from various sources, cleans and organizes them, and then generates thousands of different function-calling scenarios that mix simple and complex tasks. The resulting dataset was used to train the xLAM models, equipping them with real-world skills.

Small but Mighty - xLAM-7B ranked 6th on the Berkeley Function-Calling Benchmark (BFCL) leaderboard, outperforming previous versions of GPT-4, Llama 3-70B, and Claude-3 models. The smaller xLAM-1B also holds its own, outperforming GPT-3.5-Turbo and Claude-3 Haiku.

Excelling in Complex Tasks - Both models are adept at handling complex, multi-step scenarios in function calling. They can successfully execute a series of related commands, like those needed to book a trip, manage a calendar, or control smart home devices.
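For readers new to the term, here is a minimal sketch of what "function calling" looks like from the application side. It is not Salesforce's code or the xLAM output format: the tool names (`get_weather`, `add_event`), the JSON layout, and the model response are all made up for illustration. The idea is simply that the model emits structured calls and the app runs them in order.

```python
import json

# Hypothetical tools an app might expose; names and signatures are illustrative only.
def get_weather(city: str) -> str:
    return f"Sunny in {city}"

def add_event(title: str, date: str) -> str:
    return f"Added '{title}' on {date}"

# Registry mapping function names the model may emit to real Python callables.
TOOLS = {"get_weather": get_weather, "add_event": add_event}

def dispatch(model_output: str) -> list[str]:
    """Parse a JSON list of calls and run each one against the registry, in order."""
    results = []
    for call in json.loads(model_output):
        name = call["name"]
        if name not in TOOLS:
            raise ValueError(f"unknown function: {name}")
        results.append(TOOLS[name](**call["arguments"]))
    return results

# A made-up model response to: "What's the weather in Paris, and add lunch on July 5?"
model_output = json.dumps([
    {"name": "get_weather", "arguments": {"city": "Paris"}},
    {"name": "add_event", "arguments": {"title": "Lunch", "date": "2024-07-05"}},
])

results = dispatch(model_output)
print(results)
```

A function-calling benchmark checks, in essence, whether the model picks the right function names and fills in valid arguments for requests like this one.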

Quick Bites 🤌

Eleven Labs introduces Voice Isolator - Remove unwanted background noise and extract crystal clear dialogue from any audio to make your next podcast, interview, or film sound like it was recorded in the studio. It’s not currently optimized for music vocals.

Try it free: elevenlabs.io/voice-isolator…

— ElevenLabs (@elevenlabsio)

7:50 PM • Jul 3, 2024

Apple is getting a non-voting observer seat on OpenAI’s board of directors. Phil Schiller, the head of Apple’s App Store and its former marketing chief, will represent the company on OpenAI’s nonprofit board. This arrangement will give Apple a similar level of involvement as Microsoft. (Source)

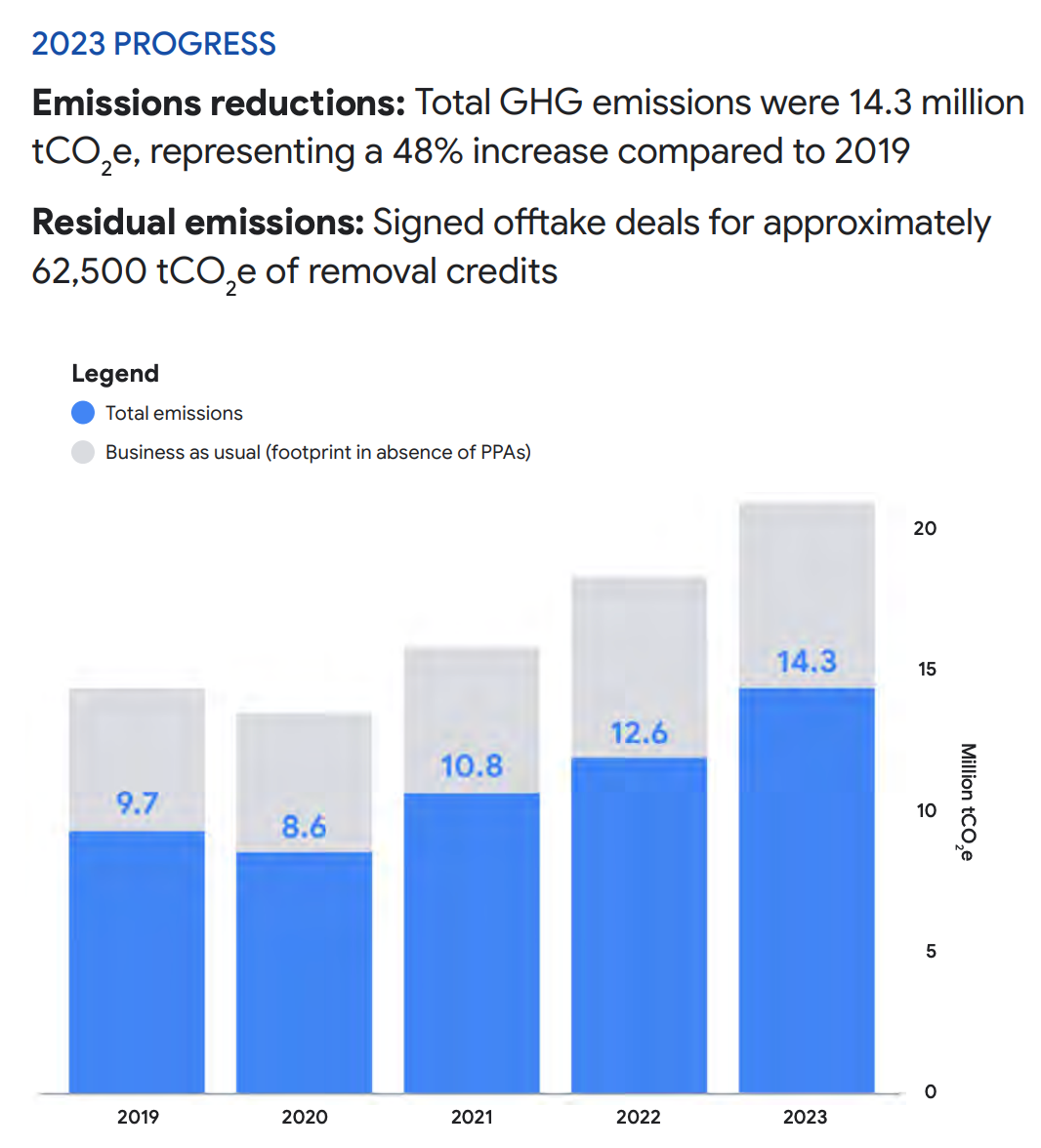

Google’s Gemini era is costing it its environmental goals. Google’s latest environmental report reveals a 48% increase in its greenhouse gas emissions since 2019, attributed to the growing energy demands of its data centers.

In 2023 alone, Google produced 14.3 million metric tons of carbon dioxide pollution, with electricity consumption from data centers accounting for a significant portion of this increase. (Source)
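A quick back-of-the-envelope check on those two numbers, assuming the 48% increase and the 14.3 million metric tons refer to the same emissions total (the report summary above does not spell this out). The variable names are ours.

```python
# Figures quoted above; the assumption is that both describe the same emissions total.
emissions_2023_mt = 14.3      # million metric tons of CO2 in 2023
increase_since_2019 = 0.48    # 48% increase relative to 2019

# Implied 2019 baseline: 2023 total divided by (1 + growth).
baseline_2019_mt = emissions_2023_mt / (1 + increase_since_2019)

# Implied absolute growth over the period.
added_mt = emissions_2023_mt - baseline_2019_mt

print(f"Implied 2019 baseline: {baseline_2019_mt:.2f} Mt")
print(f"Implied increase since 2019: {added_mt:.2f} Mt")
```

Under that assumption, the 2019 baseline works out to roughly 9.7 million metric tons, so emissions have grown by about 4.6 million metric tons since then.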


Tools of the Trade ⚒️

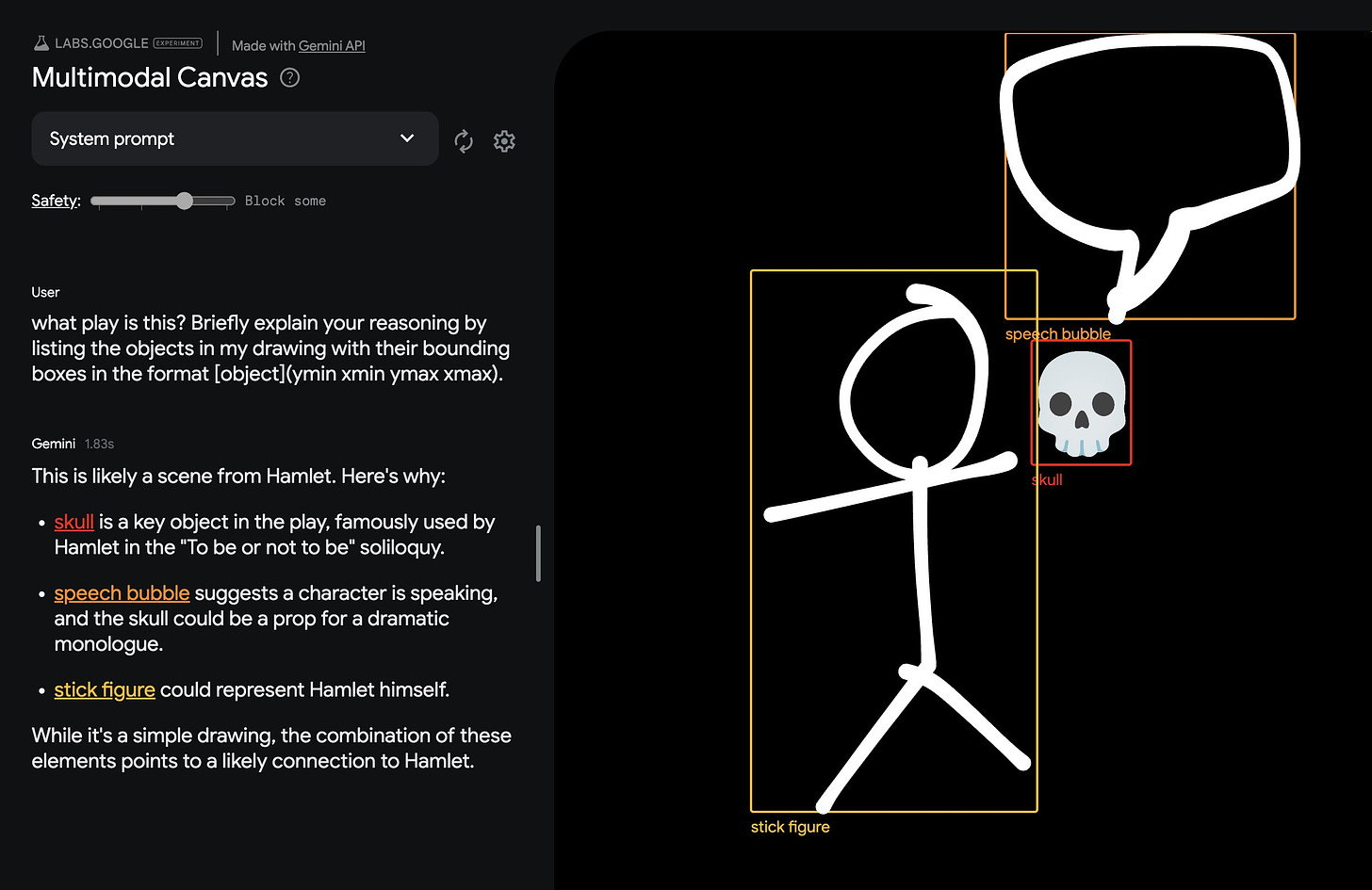

Multimodal Canvas: A digital canvas where you can mix words, drawings, photos, and even emojis to create prompts. Gemini 1.5 Flash tries to understand your combination of inputs and give you a creative response.

E2B’s Code Interpreter SDK: Add code interpreting to AI apps, running securely inside an open-source sandbox. It supports any LLM, streams content, and runs on serverless and edge functions using Python and JS SDKs.

AnythingLLM: A business intelligence tool that lets you use any LLM with any document, maintaining full control and privacy. Works on MacOS, Linux, and Windows, supports custom models, and can run fully offline on your machine.

Awesome LLM Apps: Build LLM apps that use RAG to interact with data sources like GitHub, Gmail, PDFs, and YouTube videos through simple text prompts. These apps let you retrieve information, chat, and extract insights directly from content on these platforms.

Hot Takes 🔥

When was the "talk to your PDF" trend?

I'm now seeing dozens of "talk to your data" startups. ~ Yohei

I feel like I have to once again pull out this figure. These 32x32 texture patches were state of the art image generation in 2017 (7 years ago). What does it look like for Gen-3 and friends to look similarly silly 7 years from now.

Meme of the Day 🤡

You will get access to GPT-5 and Sora from OpenAI once they are approved by US govt after election.

— AshutoshShrivastava (@ai_for_success)

1:13 PM • Jul 3, 2024

That’s all for today! See you tomorrow with more such AI-filled content.

Real-time AI Updates 🚨

⚡️ Follow me on Twitter @Saboo_Shubham for lightning-fast AI updates and never miss what’s trending!

PS: We curate this AI newsletter every day for FREE, your support is what keeps me going. If you find value in what you read, share it with your friends by clicking the share button below!
