- unwind ai

Claude 3.5 beats GPT-4o and Gemini Pro

PLUS: Mixture-of-Agents enhances LLM performance, Comedians say AI still isn't funny, ChatGPT but with tables

Today’s top AI Highlights:

Anthropic releases Claude 3.5 Sonnet, sets new standards in AI performance

Together AI’s Mixture-of-Agents approach enhances LLM performance

What happened when 20 comedians got AI to write their routines

Get more done with AI, using tables instead of chat

& so much more!

Read time: 3 mins

Latest Developments 🌍

Anthropic has released Claude 3.5 Sonnet, the successor to the Claude 3 model family that came out just three months ago. Claude 3.5 Sonnet has a context window of 200k tokens. It improves on other leading AI models, including Claude 3 Opus and GPT-4o, in both speed and intelligence.

Claude 3.5 Sonnet is available for free on Claude.ai and the Claude iOS app. You can also use it through the API, Amazon Bedrock, and Google Cloud’s Vertex AI. That’s not all! The most impressive addition this time is Artifacts, a new feature that lets you work with AI-generated content in a dynamic workspace.

Key Highlights:

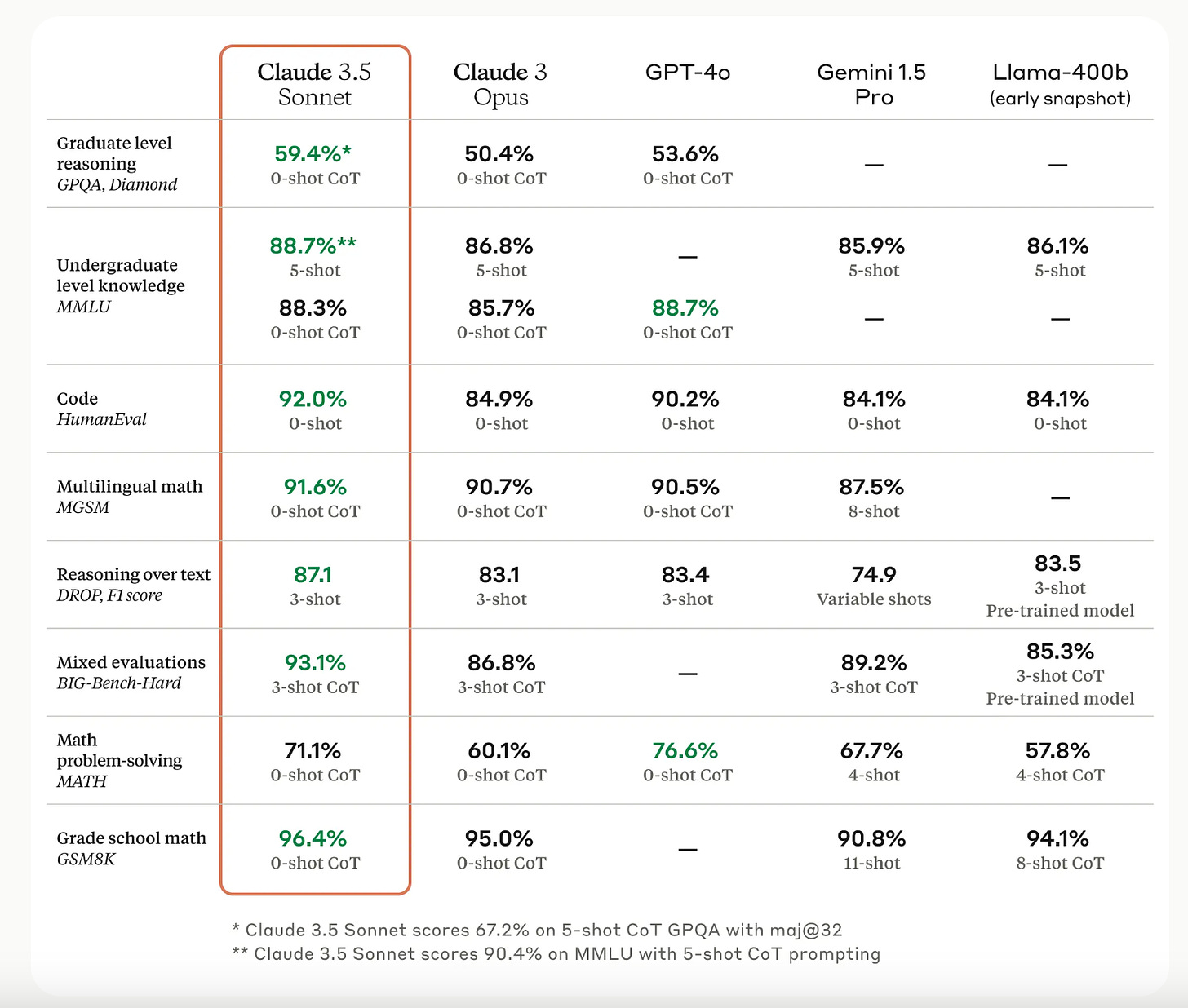

Superior Performance: Claude 3.5 Sonnet outperforms Claude 3 Opus, GPT-4o, Gemini 1.5 Pro, and Llama-3 400B (early snapshot results) on a range of benchmarks. These include graduate-level reasoning (GPQA), MMLU, math (MGSM and GSM8K), and coding (HumanEval).

Speed and Cost: It operates twice as fast as Claude 3 Opus, making it ideal for complex and real-time tasks. Despite its speed and intelligence, it costs the same as Claude 3 Sonnet, at $3 per million input tokens and $15 per million output tokens.

Vision Capabilities: The model delivers state-of-the-art performance on standard vision benchmarks such as visual math reasoning (MathVista), chart understanding (ChartQA), and document understanding (DocVQA).

Agentic Coding: Claude 3.5 Sonnet solved 64% of problems in an internal agentic coding evaluation, well ahead of Claude 3 Opus’s 38%. The evaluation asks the model to fix bugs or add functionality in an open-source codebase from a natural-language description. This makes it well suited to tasks that involve understanding and improving existing codebases.

Artifacts Feature: When you ask Claude to generate content like code snippets, text documents, or website designs, these Artifacts appear in a dedicated window alongside your conversation. This is a dynamic workspace where you can see, edit, and build upon Claude’s creations in real-time.

Safety and Privacy: Claude 3.5 Sonnet is classified as an AI Safety Level 2 (ASL-2) model following Anthropic’s safety evaluations. The UK AI Safety Institute also tested the model before deployment and shared its results with the US AI Safety Institute.
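The pricing is easier to picture with a concrete request. The sketch below estimates what a single call costs at the quoted rates of $3 per million input tokens and $15 per million output tokens. The helper name and the sample token counts are illustrative assumptions, and actual bills depend on the token counts the API reports.

```python
# Per-million-token prices quoted above for Claude 3.5 Sonnet (USD).
INPUT_PRICE_PER_M = 3.00
OUTPUT_PRICE_PER_M = 15.00


def request_cost(input_tokens: int, output_tokens: int) -> float:
    """Estimate the USD cost of one request from its token counts.

    Hypothetical helper for illustration only; it ignores any discounts
    or pricing changes.
    """
    return (
        input_tokens * INPUT_PRICE_PER_M + output_tokens * OUTPUT_PRICE_PER_M
    ) / 1_000_000


if __name__ == "__main__":
    # Example: a 10,000-token prompt that produces a 2,000-token answer.
    print(f"${request_cost(10_000, 2_000):.3f}")  # $0.060
```

Output tokens cost five times as much as input tokens. As a result, long answers can dominate the bill even when prompts are large.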

LLMs are powerful, but they can be expensive to train and scale, and they often specialize in specific tasks. How can we combine the strengths of multiple LLMs to create a more robust and capable model? The Together AI team has introduced a new approach called Mixture-of-Agents (MoA). Picture a team of LLMs working together, with each member building on the others’ outputs to improve its own response. MoA uses this collaborative approach to create a system that can outperform individual LLMs on a range of tasks.

Key Highlights:

Collaborativeness in LLMs - LLMs often perform better when they are given responses from other models, even if those responses aren’t perfect. MoA builds on this principle by having multiple LLMs share their outputs and build on each other’s responses.

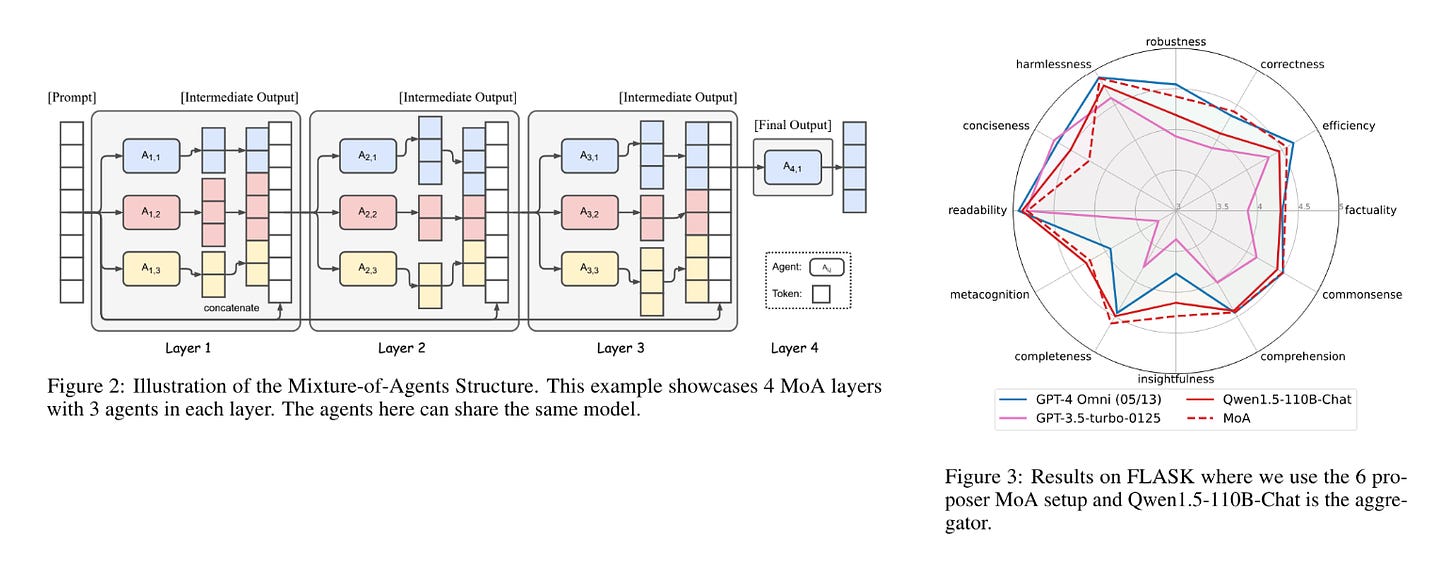

Layered architecture - Each layer in the model consists of multiple LLMs (“agents”). As the process moves from layer to layer, the LLMs use the outputs from the previous layer as additional information to improve their own responses. This iterative approach allows the model to refine its output through collaboration.

Impressive performance - MoA built only from open-source LLMs surpasses GPT-4o on the AlpacaEval 2.0 benchmark. This suggests MoA could be a cost-effective way to build high-performing LLM systems without relying on expensive closed-source models.
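Here is a rough sketch of the layered flow described above. Each "agent" is a plain function from prompt to response, standing in for a different LLM. The prompt wording, the function names, and the single final aggregator are simplifying assumptions for illustration, not Together AI’s implementation.

```python
from typing import Callable, List

# An "agent" is any function mapping a prompt to a response.
# In a real MoA setup each one would wrap a different LLM.
Agent = Callable[[str], str]


def build_prompt(user_prompt: str, previous: List[str]) -> str:
    """Attach the previous layer's responses as extra context (if any)."""
    if not previous:
        return user_prompt
    refs = "\n".join(f"{i + 1}. {r}" for i, r in enumerate(previous))
    return (
        f"{user_prompt}\n\n"
        f"Responses from other models:\n{refs}\n\n"
        "Synthesize these into a single, improved answer."
    )


def mixture_of_agents(
    user_prompt: str, layers: List[List[Agent]], aggregator: Agent
) -> str:
    """Pass the prompt through layers of agents, then aggregate the last layer."""
    previous: List[str] = []
    for layer in layers:
        # Every agent in a layer sees the original prompt plus all
        # responses produced by the previous layer.
        prompt = build_prompt(user_prompt, previous)
        previous = [agent(prompt) for agent in layer]
    # A final aggregator combines the last layer's responses into one answer.
    return aggregator(build_prompt(user_prompt, previous))


if __name__ == "__main__":
    # Toy stand-ins for LLM calls (hypothetical): each tags the question with its name.
    agents = [
        lambda p, n=n: f"{n} answer to: {p.splitlines()[0]}"
        for n in ("model-a", "model-b")
    ]
    # Using an identity aggregator prints the final prompt it would receive.
    print(mixture_of_agents("What is MoA?", [agents, agents], aggregator=lambda p: p))
```

This design requires no fine-tuning. All the collaboration happens at inference time through the prompt, so any mix of models can be plugged into the layers.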

AI is great at a lot of tasks, but what about humor? A study by Google DeepMind researchers looks at how well LLMs handle comedy writing. It finds that AI can help with structural tasks but struggles to produce genuinely funny material. Twenty professional comedians used LLMs such as ChatGPT to generate jokes, and the results were mixed. AI proved useful for overcoming writer’s block and structuring routines. The jokes it generated, however, were often described as bland, generic, and unoriginal.

This lack of comedic spark is attributed to various factors, including the safety filters that prevent AI models from generating offensive content, limiting their ability to create “dark humor”. The inherent limitations of AI in understanding the nuances of humor, particularly the surprise and incongruity that often make jokes funny, pose a significant obstacle. As one researcher put it, AI is built to predict words, not to create the unexpected twists that make audiences laugh.

Despite these challenges, AI can be a useful tool for comedians, particularly for speeding up the writing process. The participants still agreed on one thing: AI may help comedians write faster, but true originality and comedic flair remain firmly in the realm of human creativity.

😍 Enjoying it so far? Share it with your friends!

Tools of the Trade ⚒️

Otto.ai: A new way to interact with AI that uses tables instead of chat as the interface. Tables work better than chat for researching complex, multi-step topics. Otto structures tasks as a table, where each column represents a distinct task for an AI agent. You define the prompts and tools for each column, tailoring the agents to your specific needs.
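To illustrate the column-as-task idea, here is a small sketch. It is not Otto’s API. The `run_table` function, the `{name}` template syntax, and the `llm` callable are hypothetical stand-ins, and per-column tools are left out for brevity.

```python
from typing import Callable, Dict, List

# Any function that takes a prompt and returns text (stand-in for an LLM call).
LLM = Callable[[str], str]


def run_table(
    rows: List[Dict[str, str]], columns: Dict[str, str], llm: LLM
) -> List[Dict[str, str]]:
    """Fill every AI column for every row.

    `columns` maps a column name to a prompt template. A template can refer
    to the row's input fields and to earlier columns via {name} placeholders.
    Columns are filled left to right (dict insertion order), so later
    columns can build on the results of earlier ones.
    """
    filled = []
    for row in rows:
        cells = dict(row)  # copy so the input rows are not mutated
        for name, template in columns.items():
            cells[name] = llm(template.format(**cells))
        filled.append(cells)
    return filled


if __name__ == "__main__":
    fake_llm = lambda prompt: f"<answer to '{prompt}'>"  # hypothetical model
    table = run_table(
        rows=[{"company": "Acme"}, {"company": "Globex"}],
        columns={
            "summary": "Summarize what {company} does.",
            "risks": "List key risks for {company} given: {summary}",
        },
        llm=fake_llm,
    )
    for row in table:
        print(row)
```

Each row runs through the same pipeline independently. This is why a table scales more naturally than a single chat thread when you research many items the same way.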

Keak: Automates conversion rate optimization by generating and A/B testing website variations. It works on any website, lets you target specific audience segments, and keeps improving based on data from previous tests.
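Keak’s statistics are not described here. As a generic illustration, and not Keak’s method, a two-proportion z-test is one common way to check whether a variation’s conversion rate really beats the original. The visitor and conversion counts in the usage line are made up.

```python
import math
from typing import Tuple


def ab_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> Tuple[float, float]:
    """Two-proportion z-test comparing conversion rates of variants A and B.

    Returns (z, two-sided p-value). A positive z means B converts better.
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    # Pooled rate under the null hypothesis that both variants convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0  # no variation at all (0% or 100% everywhere)
    z = (p_b - p_a) / se
    # Two-sided p-value: P(|Z| >= |z|) = erfc(|z| / sqrt(2)) for a standard normal Z.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical test: 2,400 visitors per variant.
    z, p = ab_test(conv_a=120, n_a=2400, conv_b=156, n_b=2400)
    print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value (commonly below 0.05) suggests the difference is unlikely to be noise. Continuous-optimization tools typically repeat this kind of comparison as new variations are tested.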

Mirascope: A Python toolkit that simplifies building LLM applications, making it feel like writing regular Python code. It integrates easily with tools like FastAPI, supports chaining calls, and makes implementing function calls intuitive.

Awesome LLM Apps: Build LLM apps that use RAG to interact with data sources like GitHub, Gmail, PDFs, and YouTube videos through simple text prompts. These apps let you retrieve information, chat, and extract insights directly from content on these platforms.
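The retrieval step behind RAG apps like these can be sketched without any external services. This toy version ranks text chunks by bag-of-words cosine similarity, then builds a prompt from the best matches. Real RAG apps typically use embedding models and a vector store instead, and all function names here are hypothetical.

```python
import math
import re
from collections import Counter
from typing import List


def tokenize(text: str) -> Counter:
    """Lowercased word counts (a crude stand-in for an embedding)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: List[str], k: int = 2) -> List[str]:
    """Return the k chunks most similar to the query."""
    q = tokenize(query)
    return sorted(chunks, key=lambda c: cosine(q, tokenize(c)), reverse=True)[:k]


def build_rag_prompt(query: str, chunks: List[str], k: int = 2) -> str:
    """Ground the question in the retrieved chunks before sending it to an LLM."""
    context = "\n".join(f"- {c}" for c in retrieve(query, chunks, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    # Hypothetical chunks, e.g. pulled from emails or a PDF.
    docs = [
        "The invoice from Acme is due on Friday.",
        "Weekly team meeting moved to Tuesday.",
        "Acme invoice total is 500 dollars.",
    ]
    print(build_rag_prompt("When is the Acme invoice due?", docs))
```

The resulting prompt is what would be sent to the LLM. Grounding the answer in retrieved text is what lets such apps answer questions about your own GitHub repos, emails, or documents.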

Hot Takes 🔥

Any given AI startup is doomed to become the opposite of its name ~ Elon Musk

I wouldn't be surprised if Claude 3.5 Sonnet takes the top spot (for now) on the leaderboards.

But note the pattern with current models - Gemini, GPT and Claude are now all running smaller, faster, cheaper next-gen models at GPT-4 level, saying bigger versions are coming soon. ~ Ethan Mollick

That’s all for today! See you tomorrow with more such AI-filled content.

Real-time AI Updates 🚨

⚡️ Follow me on Twitter @Saboo_Shubham for lightning-fast AI updates and never miss what’s trending!

PS: We curate this AI newsletter every day for FREE. Your support is what keeps me going. If you find value in what you read, share it with your friends!