Architecture Behind Manus AI Agent

PLUS: Standardized codebases for agentic systems, Qwen multimodal reasoning model

Today’s top AI Highlights:

CodeAct: AI Agents write and execute Python code on-the-fly to complete tasks

Free ready-made backend templates for agent systems in production

Give your Cursor AI agent a persistent memory with this MCP server

Qwen’s open-source model that can reason over text, images, and videos

Browserbase MCP server for LLMs to autonomously use a browser

& so much more!

Read time: 3 mins

AI Tutorials

We've been stuck in text-based AI interfaces for too long. Sure, they work, but they're not the most natural way humans communicate. Now, with OpenAI's new Agents SDK and their recent text-to-speech models, we can build voice applications without drowning in complexity or code.

In this tutorial, we'll build a Multi-agent Voice RAG system that speaks its answers aloud. We'll create a multi-agent workflow where specialized AI agents handle different parts of the process - one agent focuses on processing documentation content, another optimizes responses for natural speech, and finally OpenAI's text-to-speech model delivers the answer in a human-like voice.

Our RAG app uses OpenAI Agents SDK to create and orchestrate these agents that handle different stages of the workflow. OpenAI’s new speech model GPT-4o-mini TTS enhances the overall user experience with a natural, emotion-rich voice. You can easily steer its voice characteristics like the tone, pacing, emotion, and personality traits with simple natural language instructions.
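To make the flow concrete, here is a minimal sketch of the pipeline shape using only the Python standard library. It is not the tutorial's code and does not call the OpenAI Agents SDK: the `Agent` class, the `DOCS` corpus, and the helper names are hypothetical stand-ins, and the final dictionary only mirrors the fields (model, voice, input, instructions) that we understand a text-to-speech request to take.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical documentation snippets used as the retrieval corpus.
DOCS = {
    "install": "Run `pip install example-sdk` to install the SDK.",
    "auth": "Set the EXAMPLE_API_KEY environment variable before making calls.",
}


@dataclass
class Agent:
    """Tiny stand-in for an SDK agent: a name plus a text-to-text function."""
    name: str
    run: Callable[[str], str]


def retrieve(query: str) -> str:
    """Naive keyword retrieval: first snippet whose key appears in the query."""
    for key, text in DOCS.items():
        if key in query.lower():
            return text
    return "No matching documentation found."


def optimize_for_speech(text: str) -> str:
    """Strip markup that reads badly when spoken aloud."""
    return text.replace("`", "")


processor = Agent("documentation_processor", retrieve)
speech_optimizer = Agent("speech_optimizer", optimize_for_speech)


def build_tts_request(text: str) -> dict:
    """Assemble the payload a text-to-speech call would receive (assumed fields)."""
    return {
        "model": "gpt-4o-mini-tts",
        "voice": "coral",
        "input": text,
        "instructions": "Speak in a warm, unhurried tone.",
    }


def answer_aloud(query: str) -> dict:
    """Chain the stages: process docs, optimize for speech, prepare TTS."""
    draft = processor.run(query)
    spoken = speech_optimizer.run(draft)
    return build_tts_request(spoken)


request = answer_aloud("How do I install the SDK?")
print(request["input"])
```

In a real app each stub would be replaced by a model-backed agent, and the dictionary would be sent to the speech endpoint. The `instructions` field is where the natural-language steering of tone and pacing would go.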

We share hands-on tutorials like this every week, designed to help you stay ahead in the world of AI. If you're serious about leveling up your AI skills and staying ahead of the curve, subscribe now and be the first to access our latest tutorials.

Latest Developments

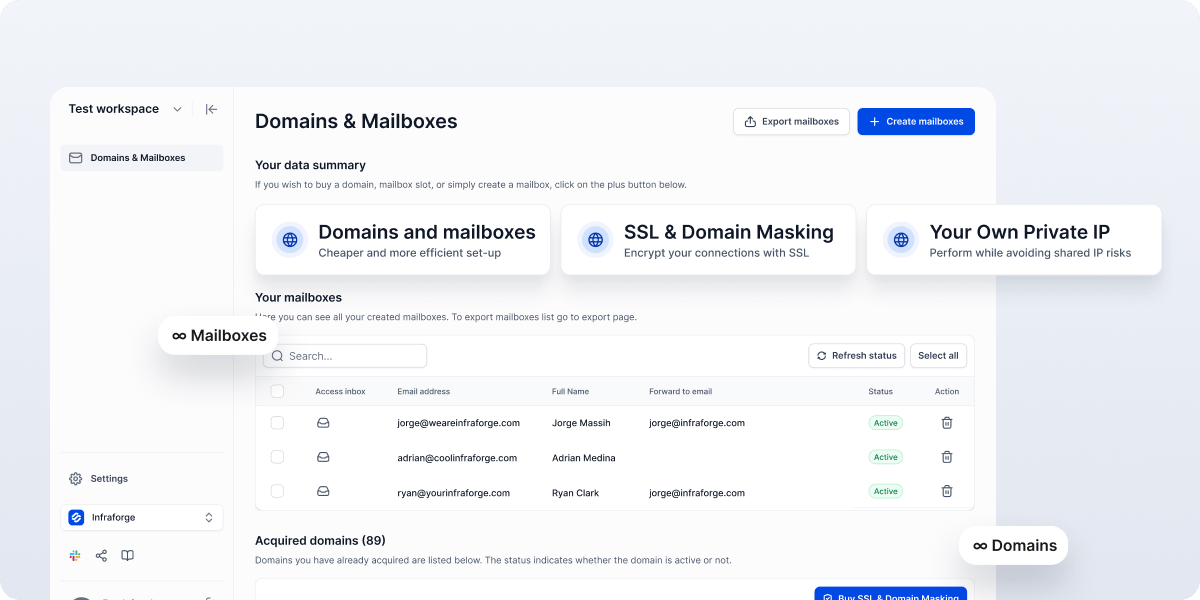

Agno has launched Workspaces, an open-source toolkit that eliminates the infrastructure hurdles when building production-ready AI agent systems. Workspaces are standardized codebases for production agentic systems that contain a REST API (FastAPI) for serving agents, teams, and workflows, a Streamlit application for testing, and a Postgres database for session and vector storage.

Workspaces are set up to run locally using Docker and be easily deployed to AWS. What makes this significant is that it handles the entire operational layer, allowing you to focus on agent logic rather than DevOps setup.

Key Highlights:

Complete agent infrastructure stack - Includes a FastAPI backend for serving agents and workflows, Postgres for session data and vector storage, and a Streamlit admin interface for testing and evaluation, all preconfigured to work together.

Deployment options - Local development runs using Docker with simple workspace commands, while production deployment to AWS is handled through the same CLI with proper infrastructure management and secrets control.

Multiple workspace templates - Choose between Agent App (full stack with admin interface) or Agent API (backend only) templates depending on project requirements, with both supporting agent teams and complex workflows.

Database integration - Preconfigured SQLAlchemy and Alembic setup handles migrations, with support for knowledge bases, session storage, and agent state persistence while maintaining high performance at scale.
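If you want a feel for what the API-plus-database part of such a stack does, here is a rough standard-library sketch. It is not Agno's code: in-memory SQLite stands in for Postgres, `handle_chat` stands in for what a FastAPI route might do, and `echo_agent` is a placeholder for a real agent. All names are hypothetical.

```python
import sqlite3


def open_store() -> sqlite3.Connection:
    """Create the session table. In-memory SQLite stands in for Postgres."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE messages ("
        "id INTEGER PRIMARY KEY, session_id TEXT, role TEXT, content TEXT)"
    )
    return conn


def echo_agent(history: list[tuple[str, str]]) -> str:
    """Placeholder agent: reports how many user turns it has seen."""
    user_turns = sum(1 for role, _ in history if role == "user")
    return f"I have seen {user_turns} message(s) in this session."


def handle_chat(conn: sqlite3.Connection, session_id: str, message: str) -> str:
    """Persist the message, load the session history, run the agent, persist the reply."""
    insert = "INSERT INTO messages (session_id, role, content) VALUES (?, ?, ?)"
    conn.execute(insert, (session_id, "user", message))
    history = conn.execute(
        "SELECT role, content FROM messages WHERE session_id = ? ORDER BY id",
        (session_id,),
    ).fetchall()
    reply = echo_agent(history)
    conn.execute(insert, (session_id, "assistant", reply))
    conn.commit()
    return reply


conn = open_store()
handle_chat(conn, "s1", "hello")
second = handle_chat(conn, "s1", "are you still there?")
other = handle_chat(conn, "s2", "hi")
print(second)
print(other)
```

The point of the sketch is the separation: the agent only sees history, and the storage layer keeps sessions isolated from one another.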


Manus AI has impressed all of us with its autonomous task execution. You might have heard people criticising it for being a mere wrapper around Claude 3.5 Sonnet and fine-tuned Qwen models. But it’s much more than that. This multi-agent system is based on the CodeAct architecture, a powerful alternative to traditional tool-calling approaches for AI agents.

CodeAct equips AI agents with a Python interpreter, so they write and execute Python code instead of emitting JSON function calls with predefined tool parameters. This makes them more efficient at solving complex problems: agents get the full power of Python to combine tools, maintain state, and process multiple inputs in a single step. CodeAct has demonstrated up to 20% higher success rates in benchmark tests.
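Here is a minimal sketch of the idea, assuming two hypothetical tools (`get_prices` and `convert`) and a hard-coded string in place of real model output. It is not how Manus or any specific library implements CodeAct, and it runs code with a bare `exec`, which a real system would replace with a sandbox.

```python
import contextlib
import io


# Hypothetical tools the agent is allowed to call from generated code.
def get_prices(item: str) -> list[float]:
    """Pretend price lookup returning quotes from three vendors."""
    return {"laptop": [999.0, 1049.5, 950.0]}.get(item, [])


def convert(amount: float, rate: float) -> float:
    """Convert an amount using a fixed exchange rate."""
    return round(amount * rate, 2)


def run_code_action(code: str, namespace: dict) -> str:
    """Execute model-written code and return whatever it printed."""
    buffer = io.StringIO()
    with contextlib.redirect_stdout(buffer):
        exec(code, namespace)  # unsandboxed: for illustration only
    return buffer.getvalue().strip()


# What a model might emit as ONE action. With JSON tool calls this would
# take several round trips: look up prices, pick the minimum, then convert.
CODE_ACTION = """
prices = get_prices("laptop")
cheapest = min(prices)
print(convert(cheapest, 0.5))
"""

namespace = {"get_prices": get_prices, "convert": convert}
observation = run_code_action(CODE_ACTION, namespace)
print(observation)
```

Because the namespace survives between actions, variables such as `cheapest` remain available to the next piece of generated code, which is what "maintain state" means in practice.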

Key Highlights:

Flexible Tool Combination & Logic - CodeAct lets your agent create intricate Python code to orchestrate tools in complex sequences, opening up possibilities for advanced workflows and adaptive decision-making. Think on-the-fly data transformation between tools and conditional branching based on results.

Built-In Self-Debugging & Error Recovery - CodeAct leverages Python's robust error handling. When things go wrong, the agent can analyze error messages, adapt its code, and retry, leading to more resilient and autonomous task completion – a massive benefit for real-world deployment.

Python's Extensive Ecosystem - CodeAct can make use of the vast library of Python modules to achieve various automation requirements, instead of being limited by custom-defined and specific tools. Imagine your agents interacting with APIs, performing complex data manipulation, or controlling external systems – all within a unified framework.

Implement using LangGraph - The langgraph-codeact library implements CodeAct agents within LangGraph, offering message history persistence, variable management, and a customizable sandbox for secure code execution. Install it with pip install langgraph-codeact. You can use this with any model supported by LangChain.
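The self-debugging highlight is easier to see in code. The sketch below is a standard-library illustration, not the langgraph-codeact API: `scripted_model` is a hypothetical stand-in for an LLM that first writes buggy code and then fixes it once the error message appears in its history.

```python
import contextlib
import io
import traceback


def execute(code: str, namespace: dict) -> tuple[bool, str]:
    """Run code; return (ok, stdout) on success or (False, last traceback line)."""
    buffer = io.StringIO()
    try:
        with contextlib.redirect_stdout(buffer):
            exec(code, namespace)  # unsandboxed: for illustration only
        return True, buffer.getvalue().strip()
    except Exception:
        return False, traceback.format_exc().strip().splitlines()[-1]


def scripted_model(history: list[str]) -> str:
    """Stand-in for an LLM: buggy first attempt, fixed after seeing the error."""
    if not any("ZeroDivisionError" in entry for entry in history):
        return "values = []\nprint(sum(values) / len(values))"
    return "values = []\nprint(sum(values) / len(values) if values else 0.0)"


def codeact_loop(task: str, max_turns: int = 3) -> tuple[str, int]:
    """Generate code, run it, and feed errors back until an action succeeds."""
    history = [task]
    namespace: dict = {}
    for turn in range(1, max_turns + 1):
        code = scripted_model(history)
        ok, output = execute(code, namespace)
        if ok:
            return output, turn
        history.append(f"Error: {output}")  # the error becomes the next observation
    raise RuntimeError("no successful action within max_turns")


answer, turns = codeact_loop("Print the mean of an empty list, or 0.0")
print(answer, turns)
```

The error text plays the same role as a tool result: it goes back into the conversation, and the next generated action can react to it.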

Quick Bites

Alibaba's Qwen team has officially released QVQ-Max, a multimodal reasoning model first previewed in December, that can not only “understand” the content in images and videos but also analyze and reason with this information to give answers. The model is great at parsing images, even pointing out small details that you might overlook. This makes it the first open-weights model that can reason over images and videos. Available on GitHub, Hugging Face and Modelscope, you can try it for free on Qwen Chat.

Cognition just launched "Devin Search" – a tool to help developers quickly understand codebases. It has two options: regular mode for fast answers about how features are built, and Deep Mode for complex questions like explaining system architecture. You can share search results with teammates and jump straight into Devin sessions from any search result. It’s available to all users.

Zep AI just released a Graphiti MCP server that gives Cursor IDE persistent memory. Now your Cursor AI agent can remember your coding preferences and project specs between sessions without starting from scratch every time. The setup is pretty straightforward – configure Cursor as an MCP client, and Graphiti handles the knowledge graph that tracks how your requirements evolve over time.

Tools of the Trade

Browserbase MCP server: Allows LLMs to control cloud-based web browsers through Browserbase and Stagehand, enabling AI to perform actions like navigating websites, capturing screenshots, extracting data, and executing JavaScript without local browser installation.

Kilo Code: An open-source AI agent VS Code extension that generates code, automates tasks, and provides suggestions to help you code more efficiently. It offers $15 worth of free Claude 3.7 Sonnet tokens with signup (no credit card needed).

HyperPilot: A free playground to test and compare AI browser agents like OpenAI Operator and Claude Computer Use without the typical cost barriers or setup complexity. It's built on Hyperbrowser's infrastructure, which lets you integrate these browser agents into your own applications with just an API and 5 lines of code.

Awesome LLM Apps: Build awesome LLM apps with RAG, AI agents, and more to interact with data sources like GitHub, Gmail, PDFs, and YouTube videos, and automate complex work.

Hot Takes

The reality of building web apps in 2025 is that it's a bit like assembling IKEA furniture. There's no "full-stack" product with batteries included, you have to piece together and configure many individual services:

- frontend / backend (e.g. React, Next.js, APIs)

- hosting (cdn, https, domains, autoscaling)

- database

- authentication (custom, social logins)

- blob storage (file uploads, urls, cdn-backed)

- email

- payments

- background jobs

- analytics

- monitoring

- dev tools (CI/CD, staging)

- secrets

- ...

I'm relatively new to modern web dev and find the above a bit overwhelming, e.g. I'm embarrassed to share it took me ~3 hours the other day to create and configure a supabase with a vercel app and resolve a few errors. The second you stray just slightly from the "getting started" tutorial in the docs you're suddenly in the wilderness. It's not even code, it's... configurations, plumbing, orchestration, workflows, best practices. A lot of glory will go to whoever figures out how to make it accessible and "just work" out of the box, for both humans and, increasingly and especially, AIs. ~

Andrej Karpathy

AGI = All Ghibli Images? ~

AK

That’s all for today! See you tomorrow with more such AI-filled content.

Don’t forget to share this newsletter on your social channels and tag Unwind AI to support us!

PS: We curate this AI newsletter every day for FREE, your support is what keeps us going. If you find value in what you read, share it with at least one, two (or 20) of your friends 😉
